A.1 Introduction

The larger document provides an overview of the P&T life cycle and efforts relevant to optimization; this appendix includes additional details of specific concepts, tools, and considerations that are important to evaluating and understanding P&T systems. This information has been included here rather than in the main document to maintain brevity while providing detail, if desired.

Concepts addressed within this appendix are organized into self-contained subsections. Please refer to the table of contents below:

- A.1 Introduction

- A.2 Modeling

- A.2.1 Modeling Limitations

- A.3 Calculations

- A.3.1 Operating Cost versus Concentration

- A.3.2 Specific Capacity of a Well

- A.3.3 Hydraulic Gradients and Groundwater Flow Directions

- A.3.4 Capture Zone Analysis

- A.3.5 Discharge Limit Calculations

- A.3.6 Concentration versus Time

- A.3.7 Rebound Testing

- A.3.8 Spatial Moments Analysis

- A.3.9 Monitoring-Well Optimization

- A.3.10 Plume Stability Evaluation

- A.3.11 Readily Available Software

- A.4 Conceptual Site Model

- A.5 Adaptive Site Management Strategies

A.2 Modeling

A groundwater model is a computer representation of an aquifer system based on flow and/or transport equations to simulate groundwater and contaminant behavior. The computer representation is typically a simplification of actual conditions described in the CSM, and the equations quantify physical and/or chemical processes of the aquifer system.

Models allow complex hydrogeologic systems to be represented for purposes of evaluating physical and chemical behaviors and can significantly improve understanding of site hydrogeologic conditions. If the hydrogeological condition is relatively stable over time, a 2D or 3D steady-state flow model might be sufficient. A transient flow model might be needed for more complex site conditions to simulate variations in groundwater levels due to seasonal fluctuations in precipitation, multiple recharge sources, and complicated groundwater pumping regimes.

For P&T systems, a groundwater flow model may be used together with particle tracking analysis to determine the target pumping rates during the design phase or to assess the hydraulic capture of the groundwater plume during performance evaluation. Both forward and backward/inverse particle tracking can be used to evaluate the plume capture of a P&T system. Depending on where and how particles are released, the capture analysis results might be slightly different. A groundwater flow model coupled with particle tracking can show how effectively the groundwater plume is being contained by a P&T system, but it cannot answer how soon the groundwater plume is expected to be cleaned up. Particle tracking is an approximate approach to evaluate plume capture because it only assesses the hydraulic (or advective) groundwater movement and does not account for other transport processes such as diffusion/dispersion, retardation, and potential degradation of contaminants.

A contaminant transport model can be used to provide an estimate for the cleanup time frame based on a site-specific CSM with thorough understanding of the features and processes that control the contaminant source release, mass transfer, and transformations in the aquifer system. Tailing and rebounding of contaminant concentrations in groundwater often occur at P&T sites for various reasons such as persistent sources (NAPL, vadose-zone infiltration, solids dissolution), matrix diffusion, and stagnant zones. To evaluate the performance of a P&T system with tailing and rebound conditions, contaminant transport models can be used to simulate the physical and chemical processes that may cause the contaminant concentration to tail and/or rebound during and after active P&T operation, although these processes increase uncertainty in modeling predictions. The modeling tool selected for the contaminant transport simulation must be capable of properly handling these site-specific features and processes, such as NAPL dissolution, matrix diffusion, and dual-porosity conditions.

The general types of models that can be used to evaluate and optimize P&T systems are described in Table A-1.

Table A-1. Models used to evaluate P&T systems

| Model Type | Approximate Time Frame to Complete Modeling | Data Needs | Examples* |
| --- | --- | --- | --- |
| Analytical | hours–1 day | hydraulic gradient and parameters (K, storage coefficients; decay coefficients, retardation factors); concentrations; matrix diffusion | BIOSCREEN, BIOCHLOR, REMChlor-MD |
| Analytic Element | hours–days | same as analytical + extraction/injection, internal boundaries, recharge | WinFlow, AquiferWin32, AnAqSim, GFLOW |
| Numerical | 1 week–months | same as analytic element + diffusion/dispersion, matrix diffusion, boundary conditions, conductance | MODFLOW, FEFLOW, FEMWATER, MT3D, MODPATH |

Note: K = hydraulic conductivity.

Note: The various models accommodate both steady-state and transient properties. Analytical and analytic-element models are typically homogeneous, and numerical models are often heterogeneous and can also incorporate dual-porosity conditions.

*These are just a few examples. A modeling/hydrogeology expert should be consulted to select the right tool for the project.

Analytical models are useful to understand basic plume transport, but simulation of P&T systems typically requires simulation of more complex conditions. Numerical 2D and 3D and analytic-element models are, therefore, more likely to be used to simulate P&T activities. All models are developed based on the CSM, calibrated to the observed data (e.g., distribution of hydraulic heads or concentrations), recalibrated (if needed) to observed changes in groundwater behavior due to imposed stresses, verified based on data sets not used in the calibration, and applied either to investigate the performance of the existing P&T system or in the predictive mode (e.g., system optimization—see Section 4). Given the relatively large uncertainty in forecasting cleanup times, model forecasts should ideally present a range of conditions factoring the uncertainty to ensure that stakeholders do not develop a false expectation about the ability of models to accurately forecast cleanup times.

A.2.1 Modeling Limitations

Because models are simplifications of real systems, they may not correctly or sufficiently represent important characteristics of the simulated system. This limitation can often be overcome with model calibration and increased parameterization, but some models, by exigencies of budget or time, may only provide relative comparisons or evaluations. In addition, a model can be over-calibrated and over-parameterized, resulting in simulations that are only reliable for the exact location and time for which they were constructed. Since a model is often used to predict conditions at some distal location or at some future time, an over-calibrated model is in many ways less useful than a simple, broadly calibrated model. It is therefore important to clearly understand the goals of a model and the questions it will be expected to answer before selecting the tools and constructing the model. Furthermore, nonuniqueness in modeling, the concept that different inputs can provide the same results, can confound modeling outcomes. This can be at least partially mitigated through a strong CSM and modeling implementation by experienced experts. Models that are improperly constructed can provide incorrect understanding and/or predictions; as a result, models should only be proposed and undertaken by or under the supervision of experienced and knowledgeable practitioners. Known limitations must be documented clearly to manage expectations.

A.3 Calculations

The following subsections provide guidance and examples for common calculations used for performance evaluation of P&T systems.

A.3.1 Operating Cost versus Concentration

As part of the performance assessment, information about the P&T design, operation, and cost is important to consider when assessing whether optimization would help performance or when comparing P&T to other remediation alternatives ( Truex et al. 2015[VKN225ST] Truex, M.J., C.D. Johnson, D.J. Becker, M.H. Lee, and M.J. Nimmons. 2015. “Performance Assessment for Pump and Treat Closure or Transition.” Pacific Northwest National Laboratory. https://www.pnnl.gov/main/publications/external/technical_reports/PNNL-24696.pdf. ). Lifetime costs for O&M of P&T systems are high when slow back diffusion of contaminants is encountered, which can result in very long time frames to meet endpoints ( FRTR 2020[M6TQYWH3] FRTR. 2020. “Technology Screening Matrix: Groundwater Pump and Treat.” Federal Remediation Technologies Roundtable. https://frtr.gov/matrix/Groundwater-Pump-and-Treat/. ). One method of cost evaluation is to calculate the total operational cost for a given time period, the amount of water extracted, and the total mass removed using the concentrations found in the extracted water. Three methods to determine mass removal can be found in the USGS report Mass of Chlorinated Volatile Organic Compounds Removed by Pump-and-Treat, Naval Air Warfare Center, West Trenton, New Jersey, 1996–2010 ( Lacombe 2011[642KC9FX] Lacombe, P.J. 2011. “Mass of Chlorinated Volatile Organic Compounds Removed by Pump-and-Treat, Naval Air Warfare Center, West Trenton, New Jersey.” U.S. Geological Survey. https://pubs.usgs.gov/sir/2011/5003/support/sir20115003.pdf. ). The data can then be used to determine a cost per unit mass of contaminants removed. Time-series plots can then be used to evaluate the cost per unit mass removed over time. Increasing costs may be directly related to economic factors (e.g., increasing power rates or inflation), but changes could also be indicative of performance issues such as reaching asymptotic recovery, increasing biofouling, or increasing mechanical issues. An evaluator would need to use multiple lines of evidence to determine the reason for a substantial unit cost increase over a short period of time.
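The cost-per-unit-mass calculation described above can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed method: the record layout, the function names, and all numbers are hypothetical, and mass removed is approximated as extracted volume multiplied by a period-average extracted concentration.

```python
# Minimal sketch: cost per unit mass removed for each reporting period.
# All records below are hypothetical illustration values, not site data.
LITERS_PER_GALLON = 3.785411784
UG_PER_KG = 1.0e9


def mass_removed_kg(volume_gal, conc_ug_per_l):
    """Approximate mass removed as extracted volume times average concentration."""
    return volume_gal * LITERS_PER_GALLON * conc_ug_per_l / UG_PER_KG


def unit_cost_series(records):
    """Return (period, mass removed in kg, cost per kg) for each record."""
    series = []
    for period, cost_usd, volume_gal, conc_ug_per_l in records:
        mass_kg = mass_removed_kg(volume_gal, conc_ug_per_l)
        cost_per_kg = cost_usd / mass_kg if mass_kg > 0 else float("inf")
        series.append((period, mass_kg, cost_per_kg))
    return series


# (period, O&M cost in dollars, gallons extracted, average influent ug/L)
records = [
    ("2021", 250_000.0, 52_000_000.0, 120.0),
    ("2022", 255_000.0, 51_000_000.0, 85.0),
    ("2023", 262_000.0, 50_500_000.0, 60.0),
]

for period, mass_kg, cost_per_kg in unit_cost_series(records):
    print(f"{period}: {mass_kg:.1f} kg removed, ${cost_per_kg:,.0f} per kg")
```

Plotting the resulting cost per kilogram against time gives the time-series view described above. In this hypothetical data set the unit cost rises mainly because influent concentrations decline, which is the kind of pattern that would prompt a closer look at asymptotic recovery.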

A.3.2 Specific Capacity of a Well

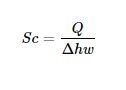

The specific capacity of a well is used to express the well productivity. Specific capacity (Sc) is defined as

$$S_c = \frac{Q}{\Delta h_w}$$

where Q is the pumping rate and Δhw is the drawdown in the well, defined as

$$\Delta h_w = \Delta h + \Delta h_L$$

where Δh is the drawdown in hydraulic head in the aquifer at the well screen boundary and ΔhL is the well loss created by the turbulent flow of water through the screen and into the pump intake (usually not significant in P&T systems unless they pump at high rates), as shown in Figure A-1 ( Freeze and Cherry 1979[8ZII3SD5] Freeze, R.A., and J.A. Cherry. 1979. Groundwater. Englewood Cliffs, New Jersey 07632: Prentice-Hall, Inc. https://www.un-igrac.org/sites/default/files/resources/files/Groundwater%20book%20-%20English.pdf. ). Well loss (ΔhL) can be estimated by methods outlined in Walton ( Walton 1970[6JS5NT6T] Walton, W.C. 1970. Groundwater Resource Evaluation. New York, NY: McGraw Hill Book Co. https://scirp.org/reference/ReferencesPapers.aspx?ReferenceID=1651823. ), Campbell and Lehr ( Campbell and Lehr 1973[QJ5DLISW] Campbell, M.D., and J.H. Lehr. 1973. Water Well Technology. New York, NY: McGraw-Hill. ), and Sterrett and colleagues ( Sterrett 2007[NE7FX9SE] Sterrett, Robert J. 2007. Groundwater & Wells. New Brighton, MN: Johnson Screens. ).

Figure A-1. Example of calculation of specific capacity of a well.

Source: Ricardo Jaimes, Washington, D.C., Department of Energy and Environment. Used with Permission. ( Freeze and Cherry 1979[8ZII3SD5] Freeze, R.A., and J.A. Cherry. 1979. Groundwater. Englewood Cliffs, New Jersey 07632: Prentice-Hall, Inc. https://www.un-igrac.org/sites/default/files/resources/files/Groundwater%20book%20-%20English.pdf. )

Specific capacity is the quantity of water that a well can produce per unit of drawdown. It is often obtained from a step-drawdown test.

A step-drawdown test used to obtain the specific capacity assumes the following:

- The well is pumped at several constant rates long enough to establish equilibrium drawdown for each.

- Drawdown within the well is a combination of the decrease in hydraulic head (pressure) within the aquifer and a pressure loss due to turbulent flow within the well.

In a step-drawdown test, the well is pumped at several successively higher pumping rates and the drawdown for each rate or step is recorded. Usually four to six pumping steps are used, each lasting approximately 1 hour. The data from a step test can be used to calculate the specific capacity of the well at various discharge rates. Information from the test can also be used to select optimum discharge rates and to calculate the relative proportion of laminar and turbulent flow occurring at selected pumping rates, expressed as the percentage of total head loss attributable to turbulent flow.

The data requirements for the specific capacity test are as follows:

- pumping well geometry

- drawdown vs. discharge rate data for the pumping well
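As an illustration of how step-test data might be reduced, the sketch below computes the specific capacity for each step and then estimates the turbulent share of head loss. It assumes the commonly used well-loss form s = BQ + CQ² (often attributed to Jacob), which the text above does not name, so treat that model, the function names, and the example numbers as assumptions. The coefficients are fitted by a straight line through s/Q versus Q.

```python
# Minimal sketch of step-drawdown data reduction (hypothetical data).
# Assumed model: drawdown s = B*Q + C*Q**2, so s/Q = B + C*Q.

def fit_line(x, y):
    """Least-squares straight line; returns (intercept, slope)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def step_test_summary(rates, drawdowns):
    """Return (B, C, rows); each row is (Q, specific capacity, % turbulent loss)."""
    specific_drawdown = [s / q for q, s in zip(rates, drawdowns)]
    b, c = fit_line(rates, specific_drawdown)
    rows = []
    for q, s in zip(rates, drawdowns):
        turbulent_loss = c * q ** 2
        pct_turbulent = 100.0 * turbulent_loss / (b * q + turbulent_loss)
        rows.append((q, q / s, pct_turbulent))
    return b, c, rows


# Four steps: pumping rate (gpm) and equilibrium drawdown (ft)
rates = [20.0, 40.0, 60.0, 80.0]
drawdowns = [1.08, 2.32, 3.72, 5.28]

b, c, rows = step_test_summary(rates, drawdowns)
for q, sc, pct in rows:
    print(f"Q = {q:.0f} gpm: Sc = {sc:.1f} gpm/ft, turbulent loss = {pct:.0f}%")
```

In this hypothetical data set the specific capacity declines as the rate increases, and the fitted turbulent share grows with it, which is the behavior the step test is designed to reveal.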

During the operation of a P&T system, Sc can be measured periodically in extraction wells to evaluate their performance. This can be done using the described step-drawdown test, which also helps assess well efficiency. Periodic Sc testing that indicates a loss of production could trigger well redevelopment.

One practical way of tracking well performance is the following simplified method for evaluating changes in specific capacity during the operation of the P&T system. This method sets a protocol that is used consistently for each extraction well over time. The steps are listed below, in order:

- Measure pumping water level.

- Then turn off the extraction well and monitor water-level recovery (this does not need to be a large data set but can be obtained from the treatment system if automated water levels are part of operational monitoring) to get an understanding of the approximate nonpumping water level. The time required depends on the hydraulic conductivity of the units in which the well screen is installed but can be simply a defined standard time, which might be as little as 1 hour or as long as 1 day.

- When the standard nonpumping time has been reached, measure the water level in the extraction well (this will be the near nonpumping water level / static level).

- After this water level is obtained, restart the extraction well at the standard test flow (this might be the design flow rate or some percentage of design flow rate and can vary from test to test to some degree +/- 20%) and operate it for the standard test time (e.g., 30 minutes) and obtain the pumping water level at that time. The specific capacity at the standard time is simply the standard test flow rate divided by the drawdown (approximate nonpumping water level versus water level at standard test time).
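The arithmetic behind the simplified protocol above is shown in the sketch below. Water levels are assumed to be recorded as depth to water below a fixed measuring point, and the function names, the example test history, and the 75% review threshold are hypothetical choices for illustration only.

```python
# Minimal sketch of the simplified specific capacity check (hypothetical values).

def simplified_specific_capacity(static_depth_ft, pumping_depth_ft, test_flow_gpm):
    """Specific capacity = standard test flow / drawdown at the standard test time.

    Depths are depth to water below the measuring point, so drawdown is the
    pumping depth minus the approximate nonpumping (static) depth.
    """
    drawdown_ft = pumping_depth_ft - static_depth_ft
    if drawdown_ft <= 0:
        raise ValueError("pumping level must be deeper than the nonpumping level")
    return test_flow_gpm / drawdown_ft


def percent_of_baseline(sc_history):
    """Express each test result as a percentage of the first (baseline) test."""
    baseline = sc_history[0]
    return [100.0 * sc / baseline for sc in sc_history]


# Hypothetical test history for one well: (static depth, pumping depth, flow)
tests = [(20.0, 30.0, 50.0), (20.4, 31.5, 50.0), (20.1, 34.0, 48.0)]
history = [simplified_specific_capacity(*t) for t in tests]

REVIEW_THRESHOLD_PCT = 75.0  # hypothetical trigger for considering redevelopment
for sc, pct in zip(history, percent_of_baseline(history)):
    flag = "review" if pct < REVIEW_THRESHOLD_PCT else "ok"
    print(f"Sc = {sc:.2f} gpm/ft ({pct:.0f}% of baseline) {flag}")
```

Because the same standard times and similar flow rates are used for every test, the results are comparable over time even though they are not true equilibrium specific capacities.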

A.3.3 Hydraulic Gradients and Groundwater Flow Directions

The following subsections discuss methods for determining hydraulic gradients and groundwater flow directions.

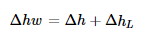

A.3.3.1 Vertical Hydraulic Gradients

The hydraulic gradient is the change in head over a distance (magnitude) in the direction that represents the maximum rate of head decline (direction).

Evaluating vertical gradients requires wells or piezometers that extend to different depths in the same aquifer and are completed immediately adjacent to each other, either with screens that are vertically isolated within the same borehole (a nest) or in separate boreholes (a cluster). The magnitude of the vertical gradient is calculated by dividing the absolute value of the head difference between the piezometers, Δh = |hB – hA|, by the absolute value of the vertical distance separating their measurement locations, ΔL = |ZB – ZA| ( Woessner and Poeter 2020[B3W39RRS] Woessner, William W, and Eileen P. Poeter. 2020. Hydrogeologic Properties of Earth Materials and Principles of Groundwater Flow. The Groundwater Project. https://gw-project.org/books/hydrogeologic-properties-of-earth-materials-and-principles-of-groundwater-flow/. ). If piezometers or monitoring wells have screens open to the groundwater over an interval, the measuring point is designated as the midpoint of the saturated portion of the screened interval. The direction of the gradient is determined by comparing the head in the shallow well, A, with that of the deeper well, B. In Figure A-2, the head at B is less than the head at A, so flow is downward. If the head in B were higher than in A, the gradient would be upward.

Figure A-2. Example of calculation of vertical hydraulic gradient.

Source: Ricardo Jaimes, Washington, D.C., Department of Energy and Environment. Used with Permission.

The most practical way to calculate the vertical hydraulic gradient is to have installed a piezometer nest/cluster in the same or adjacent aquifer units.

The USEPA has an online vertical gradient calculator to calculate the vertical hydraulic gradient between two nearby wells or piezometers located in the same aquifer; it can be found at the following link: https://www3.epa.gov/ceampubl/learn2model/part-two/onsite/vgradient02.html.
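The vertical gradient calculation described in this subsection can also be sketched directly. The example below follows the definitions given above (Δh = |hB – hA| and ΔL = |ZB – ZA|, with Z taken at the midpoint of the saturated screen); the function name and the example heads and elevations are hypothetical.

```python
# Minimal sketch of a vertical hydraulic gradient calculation (hypothetical values).

def vertical_gradient(head_a, head_b, z_a, z_b):
    """Return (magnitude, direction) for shallow well A and deeper well B.

    head_a, head_b: hydraulic heads (same datum and units)
    z_a, z_b: elevations of the midpoints of the saturated screened intervals
    """
    delta_h = abs(head_b - head_a)
    delta_l = abs(z_b - z_a)
    if delta_l == 0:
        raise ValueError("measurement points must be at different elevations")
    if head_b < head_a:
        direction = "downward"
    elif head_b > head_a:
        direction = "upward"
    else:
        direction = "none"
    return delta_h / delta_l, direction


# Shallow well A: head 100.0 ft, screen midpoint at elevation 90.0 ft
# Deep well B:    head  99.5 ft, screen midpoint at elevation 65.0 ft
magnitude, direction = vertical_gradient(100.0, 99.5, 90.0, 65.0)
print(f"vertical gradient = {magnitude:.3f} ft/ft, {direction}")
```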

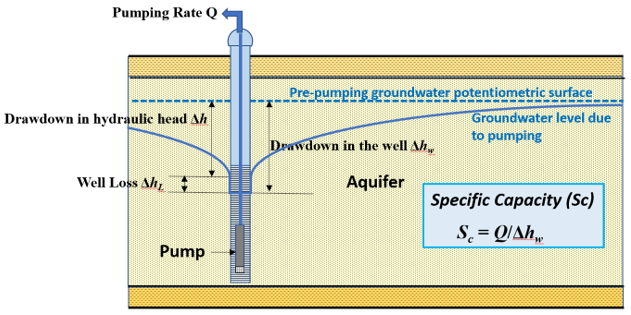

A.3.3.2 Horizontal Hydraulic Gradients and Groundwater Flow Directions

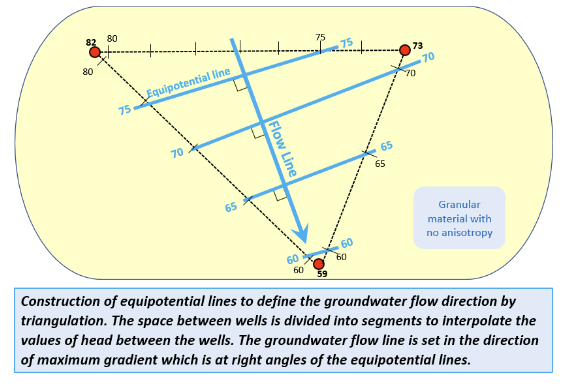

To determine the groundwater flow direction, the first step is to plot the head data on a map or cross section and then create contours of equal head (i.e., equipotential lines). The general process used to construct equipotential lines is illustrated in Figure A-3.

Figure A-3. Example of determination of groundwater flow direction.

Source: Ricardo Jaimes, Washington, D.C., Department of Energy and Environment. Used with Permission.

Alternatively, equipotential lines can be drawn using computer software that interpolates sparse data points to a regular grid (e.g., ArcGIS; the public-domain, open-source geographical information system QGIS; the gridding and contouring program Surfer; or codes in Python or any other programming language). These interpolated values are then contoured. There are many interpolation methods (e.g., kriging, nearest neighbor, triangulation, and inverse-distance weighting of surrounding data). The problem with automated interpolation is that the boundary conditions of the data may be poorly represented because the programs can, in some cases, infer values by extrapolating beyond the measured data field. One approach is to initiate contouring using the automated interpolation program and then have a professional with knowledge of the CSM and contouring methods edit or manually adjust the output, using professional judgment to account for features that the software cannot represent. In certain cases, some professionals prefer to use digitized versions of hand-drawn contour maps instead of the computer-generated versions because this allows hydrogeologic insights to be incorporated into the interpretation and often allows the boundary conditions to be represented in a more realistic manner. With any method of interpolation and contouring, results must be reviewed carefully to see whether they make hydrogeologic sense. When the output does not make sense, data quality and interpolation methods need to be reviewed, and in some cases additional field data collection must be conducted.
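To make the interpolation step concrete, the sketch below applies inverse-distance weighting, one of the methods listed above, to a handful of hypothetical head measurements. The function names, the power of 2, and the well coordinates are assumptions for illustration; the grid is deliberately limited to the bounding box of the wells so that nothing is extrapolated beyond the measured data field.

```python
import math

# Minimal sketch of inverse-distance-weighted (IDW) interpolation of heads.
# Wells are (x, y, head); all values are hypothetical.


def idw_head(x, y, wells, power=2.0):
    """Estimate head at (x, y) as a distance-weighted average of measured heads."""
    weighted_sum = 0.0
    weight_total = 0.0
    for wx, wy, head in wells:
        distance = math.hypot(x - wx, y - wy)
        if distance == 0.0:
            return head  # exactly at a well: honor the measurement
        weight = 1.0 / distance ** power
        weighted_sum += weight * head
        weight_total += weight
    return weighted_sum / weight_total


def idw_grid(wells, nx, ny):
    """Interpolate onto an nx-by-ny grid spanning only the wells' bounding box."""
    xs = [w[0] for w in wells]
    ys = [w[1] for w in wells]
    x_min, x_max, y_min, y_max = min(xs), max(xs), min(ys), max(ys)
    grid = []
    for j in range(ny):
        y = y_min + (y_max - y_min) * j / (ny - 1)
        row = []
        for i in range(nx):
            x = x_min + (x_max - x_min) * i / (nx - 1)
            row.append(idw_head(x, y, wells))
        grid.append(row)
    return grid


wells = [(0.0, 0.0, 100.0), (100.0, 0.0, 99.0), (0.0, 100.0, 99.5), (100.0, 100.0, 98.4)]
grid = idw_grid(wells, nx=5, ny=5)
for row in grid:
    print(" ".join(f"{h:6.2f}" for h in row))
```

The gridded values would then be passed to a contouring routine and reviewed against the CSM. IDW never produces values outside the range of the measured heads, which is one reason its output can miss features such as mounds or drawdown cones between wells.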

A.3.4 Capture Zone Analysis

A capture zone is the region that contributes the groundwater extracted by the extraction well(s). It is a function of the drawdown due to pumping and the background hydraulic gradient. If the water table is flat, the capture zone will be circular and will correspond to the cone of depression; however, in practice the water table is generally sloping, so the capture zone and the cone of depression will not correspond. The capture zone will be an elongated area that extends slightly downgradient of the pumping well and extends in an upgradient direction. Capture zones are controlled by the time that it takes groundwater to flow from an upgradient area to the pumping well. If sufficient pumping time elapses, the capture zone will eventually extend upgradient to the closest groundwater divide ( Fetter 2001[ETPKWSA5] Fetter, C.W. 2001. Applied Hydrogeology. 4th ed. Prentice Hall. ).

According to USEPA ( USEPA 2008[7ILB4T4V] USEPA. 2008. A Systematic Approach for Evaluation of Capture Zones at Pump and Treat Systems. Ada, OK: U.S. Environmental Protection Agency, National Risk Management Research Laboratory. https://cfpub.epa.gov/si/si_public_record_report.cfm?Lab=NRMRL&dirEntryId=187788. ), capture zone analysis is the process of evaluating field observations of hydraulic heads and groundwater chemistry to interpret the actual capture zone and then comparing the interpreted capture zone to a target capture zone to determine whether capture is sufficient. When evaluating capture zones, the USEPA document, A Systematic Approach for Evaluation of Capture Zones at Pump and Treat Systems, is recommended as companion guidance to use with existing guidance in USEPA’s Methods for Monitoring Pump-and-Treat Performance ( USEPA 1994[NSKP8SN6] USEPA. 1994. “Methods for Monitoring Pump-and-Treat Performance.” https://semspub.epa.gov/work/HQ/174486.pdf. ).

The document presents a systematic approach of six steps to evaluating capture zones at P&T sites, which are summarized below:

- Step 1: Review site data, site conceptual model, and remedy objectives.

- Step 2: Define site-specific target capture zone(s).

- Step 3: Interpret water levels.

  - Potentiometric surface maps (horizontal) and water-level difference maps (vertical)

  - Water-level pairs (gradient control points)

- Step 4: Perform calculations.

  - Estimated flow-rate calculation

  - Capture zone width calculation (can include drawdown calculation)

  - Modeling (analytical or numerical) to simulate water levels, in conjunction with particle tracking and/or transport modeling

- Step 5: Evaluate concentration trends.

- Step 6: Interpret actual capture based on Steps 1–5, compare to target capture zone(s), and assess uncertainties and data gaps.

Specific techniques to assess the extent of capture achieved by the extraction wells are applied in Steps 3–5. Each of these techniques is subject to limitations, and in most cases no single line of evidence will conclusively differentiate between successful and failed capture. Therefore, developing converging lines of evidence by applying multiple techniques to evaluate capture increases confidence in the conclusions of the capture zone analysis.
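The simple calculations listed under Step 4 can be sketched as follows. This example uses the standard analytical expressions for a single fully penetrating well in uniform regional flow: an estimated flow rate of Q = K·b·w·i·factor for a target width w, a maximum capture width of Q/(T·i), a width at the well of Q/(2·T·i), and a downgradient stagnation distance of Q/(2π·T·i), with T = K·b. These expressions assume a homogeneous, isotropic aquifer of uniform thickness at steady state with no net recharge, so they are a screening-level sketch. The function names and input values are hypothetical.

```python
import math

# Minimal sketch of Step 4 screening calculations (hypothetical inputs).
# Consistent units are assumed: K in ft/day, lengths in ft, Q in ft^3/day.

GAL_PER_FT3 = 7.48052
MIN_PER_DAY = 1440.0


def ft3_per_day_to_gpm(q_ft3_per_day):
    """Convert a flow rate from cubic feet per day to gallons per minute."""
    return q_ft3_per_day * GAL_PER_FT3 / MIN_PER_DAY


def estimated_flow_rate(k, thickness, plume_width, gradient, factor=1.0):
    """Flow through the target width: Q = K * b * w * i * factor.

    `factor` is an optional multiplier (for example, to allow for other
    contributions to the extraction well); 1.0 applies no adjustment.
    """
    return k * thickness * plume_width * gradient * factor


def capture_zone_dimensions(q, k, thickness, gradient):
    """Capture width far upgradient, width at the well, and stagnation distance."""
    transmissivity = k * thickness
    max_width = q / (transmissivity * gradient)
    return {
        "max_width": max_width,
        "width_at_well": max_width / 2.0,
        "stagnation_distance": q / (2.0 * math.pi * transmissivity * gradient),
    }


k, b, i = 50.0, 20.0, 0.01          # ft/day, ft, ft/ft
q_needed = estimated_flow_rate(k, b, plume_width=1000.0, gradient=i)
dims = capture_zone_dimensions(q_needed, k, b, i)
print(f"estimated rate: {q_needed:.0f} ft^3/day ({ft3_per_day_to_gpm(q_needed):.0f} gpm)")
for name, value in dims.items():
    print(f"{name}: {value:.0f} ft")
```

Note that the width at the well is only half of the maximum upgradient width, so a rate sized to the plume width far upgradient may not capture the full plume width at the well location. Results like these are one line of evidence to be compared with water-level interpretation and concentration trends.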

The full document, including the detailed description of the development of the six steps for capture zone analysis summarized above, can be found at the following link: https://nepis.epa.gov/Exe/ZyPDF.cgi/60000MYO.PDF?Dockey=60000MYO.PDF.

A.3.5 Discharge Limit Calculations

A discharge limit is the maximum concentration level at which specific pollutants are allowed to be discharged. These limits are established through a rule, permit, or order ( “Law Insider,” n.d.[G7XRKJSK] “Law Insider.” n.d. https://www.lawinsider.com/dictionary/maximum-concentration-level. ). P&T systems will typically have a discharge to a POTW, surface water, or groundwater. Each one of these receiving waters may have discharge limits, which fall under different regulatory programs and have different methods of determining limits.

For discharges to a POTW, the local municipality performs permitting, administrative, and enforcement tasks as approved by USEPA and authorized state pretreatment programs under the national pretreatment program. There are three types of national pretreatment requirements: prohibited discharge standards that include general and specific prohibitions, categorical pretreatment standards, and local limits ( USEPA 2011[RV64WJRR] USEPA. 2011. “Introduction to the National Pretreatment Program.” U.S. Environmental Protection Agency, Office of Wastewater Management. https://www.epa.gov/sites/default/files/2015-10/documents/pretreatment_program_intro_2011.pdf. ). Additional information on the determination of pretreatment limits can be found at https://www.epa.gov/npdes/national-pretreatment-program.

For surface water discharges under the NPDES, discharge limits are synonymous with effluent limitations. When developing effluent limitations for an NPDES permit, a permit writer must consider limits based on both the technology available to control the pollutant and limits that are protective of the water quality standards of the receiving stream ( USEPA 2022[M5ZNVDIJ] USEPA. 2022. “National Pollutant Discharge Elimination System (NPDES).” September 2022. https://www.epa.gov/npdes. ). Additional information on the determination of effluent limitations can be found at https://www.epa.gov/npdes.

For groundwater injection or recirculation, the underground injection control program implemented by USEPA and primacy states focuses on protection of drinking water. Aquifer remediation wells are subject to the underground injection control regulations as either Class IV or Class V wells. The difference between Class IV and Class V wells is the fluid being injected. Class IV wells are used to inject hazardous or radioactive fluids. Class V wells may inject only nonhazardous fluids (see EPA’s Class IV well guidance for more information). Class IV wells are generally prohibited; however, they are allowed to inject treated groundwater back into the same formation from which it was drawn, if approved under CERCLA or RCRA ( USEPA 1999[DZ6GIAH4] USEPA. 1999. “The Class V Underground Injection Control Study, Volume 16: Aquifer Remediation Wells.” U.S. Environmental Protection Agency, Office of Ground Water and Drinking Water. https://www.epa.gov/uic/class-v-underground-injection-control-study. ). Environmental consultants, owners, and operators of aquifer remediation–related injection wells are required to notify USEPA or the primacy state and receive approval of or rule authorization for the injection wells, which is contingent on operator compliance with all applicable federal, state, and local requirements ( USEPA 2022[HCXHR83F] USEPA. 2022. “Aquifer Remediation Related Shallow Injection Wells.” https://www.epa.gov/uic/aquifer-remediation-related-shallow-injection-wells. ). Additional information on Class V well requirements can be found at https://www.epa.gov/uic. Additional state and local program requirements may also impact the discharge limit for groundwater.

A.3.6 Concentration versus Time

During the course of P&T operations, it is critical to monitor COC concentrations over time to determine whether the P&T remediation is progressing as expected. Time-series plots, with either the date or elapsed time on the horizontal axis and the concentration on the vertical axis, are simple representations for monitoring COC concentrations. The ITRC document Groundwater Statistics and Monitoring Compliance (GSMC-1) documents the proper procedures for constructing time-series plots, and their limitations, in Section 5.1 ( ITRC 2013[4DY4TN8V] ITRC. 2013. “Groundwater Statistics and Monitoring Compliance.” Washington, D.C.: Interstate Technology & Regulatory Council, Groundwater Statistics and Monitoring Compliance Team. https://projects.itrcweb.org/gsmc-1/. ).

A.3.6.1 Trend Analysis

Temporal trends (i.e., changes over time) describe the rate of change of contaminant concentrations at each location and can be used for simple predictions of future concentrations with an inherent assumption that conditions affecting concentration do not change. Concentration trends are commonly classified into three categories:

- Increasing—where contaminant concentrations continue to increase over time.

- Decreasing—where contaminant concentrations continue to decrease over time.

- Stable—where contaminant concentrations remain relatively constant over time.

Many methods can be used to estimate temporal trends in sampled concentrations. The simplest, a simple linear regression, consists of one independent variable (time) and one dependent variable (concentration). Typically, temporal trend methodologies can be classified into two main categories: (1) univariate methods and (2) multivariate methods.

A.3.6.1.1 Univariate Trend Analysis Methods

Univariate trend analysis methods evaluate the sampled concentrations against a single variable (i.e., time):

$$y = \beta_0 + \beta_1 t + \varepsilon$$

where y is the sampled concentration, t is time, β0 and β1 are assumed to be constant and are estimated based on the regression method, and ε is the error.

When data obtained during different seasons or other cyclical periods exhibit significantly different concentrations, seasonal trend tests are often used ( Helsel et al. 2020[EMAX568C] Helsel, Dennis R., Robert M. Hirsch, Karen R. Ryberg, Stacey A. Archfield, and Edward J. Gilroy. 2020. “Statistical Methods in Water Resources.” Report 4-A3. Techniques and Methods. Reston, VA. USGS Publications Warehouse. https://doi.org/10.3133/tm4A3. ). These tests are conducted by performing trend analysis on a subset of the available data, for instance using only data collected in the spring, and require that data be collected during the same period each year at each monitoring point. Such pragmatic approaches have at least two undesirable consequences. First, limiting the trend analysis data set to (seasonal) subsets can greatly reduce the amount of data and thereby make it more difficult to detect trends or estimate them precisely. Second, if site-specific data and subject-matter experts know of a probable cause for such cyclical or repeating patterns that could potentially be quantified and used to help understand, explain, and predict concentration changes, then this apparent pragmatism prevents thorough data exploration.

Ordinary Least Squares (OLS)

Ordinary least squares (OLS) is the most common linear regression method ( Legendre 1806[ALMJZIMP] Legendre, Adrien M. 1806. Nouvelles Méthodes Pour La Détermination Des Orbites Des Comètes; Avec Un Supplément Contenant Divers Perfectionnemens de Ces Méthodes et Leur Application Aux Deux Comètes de 1805. Paris, France: Firmin Didot. ). Estimates of the trend parameters—typically, the rate of change with time (i.e., the slope) and an intercept—are obtained by minimizing the sum of squared differences between the sample data and their fitted values. OLS assumes that underlying errors are independent of the explanatory variables, they follow a distribution that is approximately normal with zero mean, and their variances are constant over time (i.e., homoscedastic). These assumptions are important for deciding when the slope of the linear regression is statistically significant (i.e., significantly different from zero).

OLS also requires that all values of the sampled concentration be quantified, which rules out its direct application to data sets with nondetects or other “less than” values. Many groundwater monitoring programs, especially those where groundwater concentrations are at low levels, produce nondetect results. Nondetects are left-censored data: they provide information about a possible range of values, namely all those values that are less than the laboratory reporting limit. Substitution methods (i.e., replacing the censored data with a predetermined value, commonly half of the laboratory reporting limit) can impart significant bias to parameter estimates ( USEPA 2009[JN7FXYXM] USEPA. 2009. “Statistical Analysis of Groundwater Monitoring Data at RCRA Facilities.” U.S. Environmental Protection Agency, Office of Resource Conservation and Recovery. https://archive.epa.gov/epawaste/hazard/web/pdf/unified-guid.pdf; Helsel 2005[49GL74SR] Helsel, Dennis R. 2005. “More Than Obvious: Better Methods for Interpreting Nondetect Data.” Environmental Science & Technology 39 (20): 419A-423A. https://doi.org/10.1021/es053368a; Helsel and Gilliom 1986[TIRSWKEI] Helsel, Dennis R., and Robert J. Gilliom. 1986. “Estimation of Distributional Parameters for Censored Trace Level Water Quality Data: 2. Verification and Applications.” Water Resources Research 22 (2): 147–55. https://doi.org/10.1029/WR022i002p00147; Gilliom and Helsel 1986[Z4X4Q4WK] Gilliom, Robert J., and Dennis R. Helsel. 1986. Estimation of Distributional Parameters for Censored Trace Level Water Quality Data: 1. Estimation Techniques. Vol. 22. https://agupubs.onlinelibrary.wiley.com/doi/abs/10.1029/WR022i002p00135. ). The occurrence of differing laboratory reporting limits, which is common in long-term data sets due to either advances in analytical methods or sample dilution, further complicates the selection of a substitution value. Consequently, OLS is often unsuitable for trend analysis in the presence of nondetects.
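For a fully quantified data set (no nondetects), the least-squares fit described above can be sketched with the closed-form expressions for the slope and intercept. The function name and the example data are hypothetical. The sketch reports the t-statistic for the slope but does not compute a p-value; judging significance would require comparing it with a Student's t critical value for n − 2 degrees of freedom, which is left to a statistics package.

```python
import math

# Minimal sketch of an OLS trend fit of concentration against time.
# Assumes every result is quantified (no nondetects); data are hypothetical.


def ols_trend(times, concentrations):
    """Return (slope, intercept, standard error of slope, t-statistic)."""
    n = len(times)
    if n < 3:
        raise ValueError("at least three samples are needed")
    mean_t = sum(times) / n
    mean_c = sum(concentrations) / n
    s_tt = sum((t - mean_t) ** 2 for t in times)
    s_tc = sum((t - mean_t) * (c - mean_c) for t, c in zip(times, concentrations))
    slope = s_tc / s_tt
    intercept = mean_c - slope * mean_t
    # Residual sum of squares: the quantity that OLS minimizes
    sse = sum((c - (intercept + slope * t)) ** 2 for t, c in zip(times, concentrations))
    se_slope = math.sqrt(sse / (n - 2) / s_tt)
    if se_slope > 0:
        t_stat = slope / se_slope
    else:
        t_stat = 0.0 if slope == 0 else math.copysign(math.inf, slope)
    return slope, intercept, se_slope, t_stat


years = [0.0, 1.0, 2.0, 3.0, 4.0]
conc_ug_per_l = [10.1, 7.9, 6.2, 3.8, 2.0]
slope, intercept, se_slope, t_stat = ols_trend(years, conc_ug_per_l)
print(f"slope = {slope:.2f} ug/L per year, intercept = {intercept:.2f} ug/L")
print(f"standard error = {se_slope:.3f}, t = {t_stat:.1f}")
```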

Mann-Kendall Trend Test

The Mann-Kendall test is a nonparametric test that evaluates monotonic trends by scoring a data set based on whether each later measurement is higher (+1), lower (-1), or the same (0) as each earlier measurement ( Mann 1945[3AUM7YE3] Mann, H.B. 1945. “Nonparametric Tests against Trend.” Econometrica. http://dx.doi.org/10.2307/1907187. ). The Mann-Kendall statistic, S, is the sum of these scores. The significance of a trend is determined by comparing the standardized Z-statistic for the trend, shown here in its commonly used continuity-corrected form, to a critical point (determined by the level of significance, α, based on the percentiles of the standard normal distribution):

$$
Z = \begin{cases} \dfrac{S - 1}{SD[S]} & \text{if } S > 0 \\ 0 & \text{if } S = 0 \\ \dfrac{S + 1}{SD[S]} & \text{if } S < 0 \end{cases}
$$

where Z is the Z-statistic, S is the Mann-Kendall statistic, and SD[S] is the standard deviation of the Mann-Kendall statistic. When the value of S is positive and the absolute value of the Z-statistic is greater than the critical point, a statistically significant increasing trend is present. When the value of S is negative and the absolute value of the Z-statistic is greater than the critical point, a statistically significant decreasing trend is present. When the absolute value of the Z-statistic is less than the critical point, no statistically significant trend is present, and the concentration is considered stable.

The Mann-Kendall test does not estimate the slope; for that purpose, it is often paired with the Theil-Sen slope estimate, which is the median of all pairwise slopes ( Gilbert 1987[55X9RYWD] Gilbert, Richard O. 1987. “Statistical Methods for Environmental Pollution Monitoring.” https://www.osti.gov/servlets/purl/7037501. ). The Akritas-Theil-Sen method extends the Theil-Sen method to data sets with multiple censoring levels ( Helsel 2012[62Z3IQJU] Helsel, Dennis R. 2012. Statistics for Censored Environmental Data Using Minitab and R. 2nd ed. Hoboken, N.J: Wiley. ).
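The scoring, standardization, and slope steps described above can be sketched in a few lines. This is a minimal illustration, not a substitute for a vetted statistics package: it assumes a fully quantified (no nondetect) series, uses the continuity-corrected Z with the usual tie correction, and relies on the normal approximation, which is generally applied only to larger samples. The function names and the quarterly concentrations are hypothetical.

```python
import math
from statistics import NormalDist, median


def mann_kendall(values):
    """Return (S, Z) for a time-ordered series of quantified results."""
    n = len(values)
    # S sums the sign of every later-minus-earlier difference.
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = values[j] - values[i]
            s += (diff > 0) - (diff < 0)
    # Variance of S, reduced for each group of tied values.
    counts = {}
    for v in values:
        counts[v] = counts.get(v, 0) + 1
    var_s = n * (n - 1) * (2 * n + 5)
    for t in counts.values():
        var_s -= t * (t - 1) * (2 * t + 5)
    sd_s = math.sqrt(var_s / 18.0)
    # Continuity-corrected Z; Z is zero when S is zero.
    if s > 0:
        z = (s - 1) / sd_s
    elif s < 0:
        z = (s + 1) / sd_s
    else:
        z = 0.0
    return s, z


def theil_sen_slope(times, values):
    """Median of all pairwise slopes."""
    slopes = [
        (values[j] - values[i]) / (times[j] - times[i])
        for i in range(len(times))
        for j in range(i + 1, len(times))
        if times[j] != times[i]
    ]
    return median(slopes)


def classify_trend(values, alpha=0.05):
    """Two-sided test of |Z| against the standard normal critical point."""
    s, z = mann_kendall(values)
    critical = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    if abs(z) <= critical:
        return "no significant trend"
    return "increasing" if s > 0 else "decreasing"


# Hypothetical quarterly concentrations (ug/L) at one well.
quarters = list(range(10))
conc = [120, 110, 95, 90, 70, 65, 50, 42, 30, 25]
print(mann_kendall(conc), classify_trend(conc), theil_sen_slope(quarters, conc))
```

Data sets with nondetects would need a censored-data variant (for example, the Akritas-Theil-Sen approach mentioned above) rather than this sketch.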

Local Regression

Temporal local regression analysis, also known as loess smoothing, combines linear and nonlinear regression methods to fit complex and/or seasonally impacted temporal trends. This method estimates the concentration at a given point in time as a weighted average of its nearest neighbors. Smoothing methods provide simple model fits to localized subsets of the data to build a function that describes the variation in the data point by point, as opposed to a global function that fits all of the data at once (e.g., linear regression). Because the fit is local to the observed data, this method cannot be used to predict concentrations beyond the sampled time period.
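To make the "localized subsets" idea concrete, the following sketch fits a weighted straight line to the k nearest samples around a target time. It is an illustrative simplification under stated assumptions (tricube weights, a fixed neighbor count `k`, no robustness iterations); the function name and parameters are hypothetical and do not correspond to any particular software package.

```python
def local_linear_fit(t0, times, values, k=5):
    """Estimate the value at t0 from a weighted line through the k nearest samples."""
    nearest = sorted(zip(times, values), key=lambda p: abs(p[0] - t0))[:k]
    bandwidth = max(abs(t - t0) for t, _ in nearest)
    if bandwidth > 0.0:
        # Tricube weights: closest samples count most, the farthest gets zero.
        weights = [(1.0 - (abs(t - t0) / bandwidth) ** 3) ** 3 for t, _ in nearest]
    else:
        weights = [1.0] * len(nearest)
    if sum(weights) == 0.0:
        weights = [1.0] * len(nearest)
    w_sum = sum(weights)
    t_bar = sum(w * t for w, (t, _) in zip(weights, nearest)) / w_sum
    v_bar = sum(w * v for w, (_, v) in zip(weights, nearest)) / w_sum
    s_tt = sum(w * (t - t_bar) ** 2 for w, (t, _) in zip(weights, nearest))
    if s_tt == 0.0:
        return v_bar  # all weight on a single time; fall back to the weighted mean
    s_tv = sum(w * (t - t_bar) * (v - v_bar) for w, (t, v) in zip(weights, nearest))
    return v_bar + (s_tv / s_tt) * (t0 - t_bar)


# Hypothetical series with a seasonal swing on top of a decline.
times = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
values = [50, 58, 47, 40, 44, 52, 38, 30, 33, 41]
smoothed = [local_linear_fit(t, times, values) for t in times]
print(smoothed)
```

Evaluating the function only at times inside the sampled record is consistent with the limitation noted above.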

A.3.6.1.2 Multivariate Trend Analysis Methods

Multivariate trend analysis methods evaluate the sampled concentrations against multiple independent variables, such as streamflow or water-level changes or precipitation ( Helsel et al. 2020[EMAX568C] Helsel, Dennis R., Robert M. Hirsch, Karen R. Ryberg, Stacey A. Archfield, and Edward J. Gilroy. 2020. “Statistical Methods in Water Resources.” Report 4-A3. Techniques and Methods. Reston, VA. USGS Publications Warehouse. https://doi.org/10.3133/tm4A3. ):

where X1, …, Xp are independent variables that may explain a substantial part of the observed variance in sampled concentrations, β2, …, βp are assumed to be constant and are estimated based on the regression method, and εi are the errors.

Two types of independent variables can be incorporated into multiple regression analysis: predictable and random. Predictable covariates are those for which the future values are known—for example, time. Random covariates are those that have not been observed during the time interval [t0, t1] and must, therefore, be predicted or assumed. Covariates that vary over time, such as water level or river stage, may be considered predictable or random, depending on whether they have been observed throughout the target interval [t0, t1].

In multiple regression analysis, one goal is to strike a balance between underfitting, where some variables that can explain the variability in the response variable are missing from the regression model, and overfitting, where some of the random error is mistakenly represented by one or more covariates in the regression model. Underfitting a model can widen the confidence intervals of the regression parameters and bias their estimates, and overfitting can lead to an overly optimistic assessment of precision and confound sources of variability (such as laboratory analytical error) with the natural phenomena of interest. Several tests can be performed to help select the most appropriate regression model, such as the Bayesian information criterion and the likelihood ratio test.

Ordinary Least Squares (OLS)

In the absence of nondetects, OLS can be used for multiple regression analysis. The approach is the same as for univariate trend analysis, except that additional independent variables are added that may explain a substantial part of the observed variance in sampled concentrations.

Maximum Likelihood Estimation (MLE)

Maximum likelihood estimation (MLE) methods, such as the Tobit method, have long been applied to environmental data sets containing censored response variables ( DiFilippo et al. 2019[QMBJ8Z3P] DiFilippo, Erica, Matt Tonkin, Alex Spiliotopoulos, William Huber, and Virginia Rohay. 2019. “Evaluating Environmental Remediation Performance at Radwaste Sites Using Multiple, Censored Regression Analysis - 19348.” In Report Number: INIS-US-21-WM-19348. Research Org.: WM Symposia, Inc., PO Box 27646, 85285-7646 Tempe, AZ (United States). https://www.osti.gov/biblio/23003080. ; Haslauer et al. 2017[64GMPQE2] Haslauer, Claus P., Jessica R. Meyer, András Bárdossy, and Beth L. Parker. 2017. “Estimating a Representative Value and Proportion of True Zeros for Censored Analytical Data with Applications to Contaminated Site Assessment.” Environmental Science & Technology 51 (13): 7502–10. https://doi.org/10.1021/acs.est.6b05385. ; Liu et al. 1997[TJZIMPLH] Liu, S., J.-C. Lu, D.W. Kolpin, and W.Q. Meeker. 1997. “Analysis of Environmental Data with Censored Observations.” Environmental Science & Technology 31 (12): 3358–62. https://doi.org/10.1021/es960695x. ; Helsel 1990[F3NR76UG] Helsel, Dennis R. 1990. “Less Than Obvious - Statistical Treatment of Data Below the Detection Limit.” Environmental Science & Technology 24 (12): 1766–74. https://doi.org/10.1021/es00082a001. ; Cohn 1988[VBMRTMBF] Cohn, Timothy A. 1988. “Adjusted Maximum Likelihood Estimation of the Moments of Lognormal Populations from Type 1 Censored Samples.” Report 88–350. Open-File Report. USGS Publications Warehouse. https://doi.org/10.3133/ofr88350. ; Cohen 1950[QJCDESH2] Cohen, Arthur. 1950. “Estimating the Mean and Variance of Normal Populations from Singly Truncated and Doubly Truncated Samples.” Annals of Mathematical Statistics 21: 557–69. ; Helsel et al. 2020[EMAX568C] Helsel, Dennis R., Robert M. Hirsch, Karen R. Ryberg, Stacey A. Archfield, and Edward J. Gilroy. 2020. “Statistical Methods in Water Resources.” Report 4-A3. Techniques and Methods. Reston, VA. USGS Publications Warehouse. https://doi.org/10.3133/tm4A3. ; Helsel 2005[49GL74SR] Helsel, Dennis R. 2005. “More Than Obvious: Better Methods for Interpreting Nondetect Data.” Environmental Science & Technology 39 (20): 419A-423A. https://doi.org/10.1021/es053368a. ). MLE methods estimate the regression parameters that make the observed data, including the censored values, most probable by maximizing a likelihood function. Similar to OLS, this method assumes that underlying errors are independent of the explanatory variables, that they follow a distribution that is approximately normal with zero mean, and that their variances are constant over time (i.e., homoscedastic).
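For readers who want to see how censored values enter the likelihood, the log-likelihood for a Tobit-type regression with left-censored responses and normal errors is commonly written as shown below. The notation is an assumption introduced here for illustration: $y_i$ is the (possibly log-transformed) response, $\mathbf{x}_i$ is the vector of explanatory variables, $RL_i$ is the reporting limit of a nondetect on the same scale as $y_i$, and $\phi$ and $\Phi$ are the standard normal density and cumulative distribution functions.

```latex
\ell(\beta, \sigma) =
  \sum_{i \in \text{detects}}
    \left[ \ln \phi\!\left( \frac{y_i - \mathbf{x}_i^{\top}\beta}{\sigma} \right) - \ln \sigma \right]
  + \sum_{i \in \text{nondetects}}
    \ln \Phi\!\left( \frac{RL_i - \mathbf{x}_i^{\top}\beta}{\sigma} \right)
```

Each detected value contributes its density, and each nondetect contributes the probability of falling below its own reporting limit, which is why multiple reporting limits can be accommodated without substitution.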

Detrending

Detrending involves removing the influence of covariates, such as river stage, from the response variable before conducting a univariate trend analysis. Several methods are available for characterizing the influence of the covariate, such as OLS, loess smoothing ( Helsel et al. 2020[EMAX568C] Helsel, Dennis R., Robert M. Hirsch, Karen R. Ryberg, Stacey A. Archfield, and Edward J. Gilroy. 2020. “Statistical Methods in Water Resources.” Report 4-A3. Techniques and Methods. Reston, VA. USGS Publications Warehouse. https://doi.org/10.3133/tm4A3. ), or development of response functions ( Spane and Mackley 2011[J2NED6U9] Spane, Frank A., and Rob D. Mackley. 2011. “Removal of River-Stage Fluctuations from Well Response Using Multiple Regression.” Ground Water 49 (6): 794–807. https://doi.org/10.1111/j.1745-6584.2010.00780.x. ). Univariate trend analysis is then performed on the residuals of the relationship between the covariate and the response variable.
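As a minimal sketch of the OLS option, the example below regresses concentration on a single covariate and returns the residuals that would then be passed to a univariate trend test. The river-stage and concentration values are hypothetical, and the helper names are introduced here only for illustration; loess or response-function approaches would replace the `ols_fit` step.

```python
def ols_fit(x, y):
    """Return (slope, intercept) of the least-squares line y = intercept + slope * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = s_xy / s_xx
    return slope, y_bar - slope * x_bar


def detrend(covariate, response):
    """Remove the fitted covariate effect and return the residuals."""
    slope, intercept = ols_fit(covariate, response)
    return [r - (intercept + slope * c) for c, r in zip(covariate, response)]


# Hypothetical paired observations: river stage (m) and concentration (ug/L).
stage = [101.2, 102.5, 103.1, 101.8, 100.9, 102.2, 103.4, 101.1]
conc = [48.0, 39.5, 35.0, 42.0, 45.5, 36.0, 29.0, 40.5]
residuals = detrend(stage, conc)
print(residuals)
```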

A.3.6.1.3 Data Transformations

It is often appropriate to analyze the natural logarithms of the responses to make the data more nearly normal and improve the fit and applicability of the OLS or MLE regression, especially when the responses are chemical concentrations ( Helsel et al. 2020[EMAX568C] Helsel, Dennis R., Robert M. Hirsch, Karen R. Ryberg, Stacey A. Archfield, and Edward J. Gilroy. 2020. “Statistical Methods in Water Resources.” Report 4-A3. Techniques and Methods. Reston, VA. USGS Publications Warehouse. https://doi.org/10.3133/tm4A3. ; Gilbert 1987[55X9RYWD] Gilbert, Richard O. 1987. “Statistical Methods for Environmental Pollution Monitoring.” https://www.osti.gov/servlets/purl/7037501. ):

where ci is the representative measurement of water quality (e.g., concentration).

For nonparametric trend methods, such as the Mann-Kendall trend test, the resulting trend determination will be the same regardless of transformation. For parametric methods such as OLS and MLE, it is important to evaluate the impact data transformations may have on final trend results.

A.3.6.2 Mass Removal Rate

Mass removal rates are a particularly important and practical performance metric for determining the effectiveness of the P&T system or of any other active technology to which the P&T system may have been transitioned. As a P&T system is operated, trends in concentration versus time and mass removal rate versus time are monitored continually (e.g., using tables or graphics) to evaluate the performance of pumping wells and the treatment system. Mass removal rates are best analyzed by converting concentrations to molar units and summing the moles of COCs within the respective class of compounds.
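The molar conversion can be illustrated with a short calculation. The sketch below assumes a chlorinated ethene suite at a single extraction well, concentrations in µg/L, and a flow rate in gallons per minute; the well data and function name are hypothetical, while the molar masses are standard values for these compounds.

```python
# Molar masses in g/mol for a chlorinated ethene suite.
MOLAR_MASS_G_PER_MOL = {
    "PCE": 165.83,
    "TCE": 131.39,
    "cis-1,2-DCE": 96.94,
    "VC": 62.50,
}
LITERS_PER_GALLON = 3.785411784
MINUTES_PER_DAY = 1440


def molar_removal_rate(conc_ug_per_l, flow_gpm):
    """Return the total removal rate in mol/day for one extraction well."""
    # ug/L divided by g/mol gives umol/L; sum across the compound class.
    umol_per_l = sum(
        conc / MOLAR_MASS_G_PER_MOL[name] for name, conc in conc_ug_per_l.items()
    )
    liters_per_day = flow_gpm * LITERS_PER_GALLON * MINUTES_PER_DAY
    return umol_per_l * liters_per_day * 1e-6  # umol/day to mol/day


# Hypothetical influent concentrations (ug/L) and pumping rate (gpm).
sample = {"PCE": 250.0, "TCE": 120.0, "cis-1,2-DCE": 60.0, "VC": 5.0}
print(molar_removal_rate(sample, flow_gpm=15.0))
```

Summing in moles keeps parent compounds and daughter products on a common basis, so degradation from one compound to another does not appear as a change in total mass removed.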

Experience and lessons learned show that in the initial stages of treatment system operation, the cumulative molar mass removed increases rapidly with time as the high concentrations in the source area and dissolved plume are captured and treated by the pumping system. As the number of pore volumes extracted from the aquifer and treated increases, it is common to see tapering of the mass removal rates from individual wells and from the total treatment system. For example, tapering of molar mass removal is first observed in distal or downgradient dissolved plume wells that are within the capture zone of the P&T system. Tapering in the source area pumping wells is much slower because mass may have sorbed to the soil matrix or may be present in dead-end bedrock fractures. Under these conditions, the COCs slowly desorb or back diffuse into the aquifer or are flushed out from bedrock fractures.

As these concentrations continue to decrease, the mass removal rates gradually decline to asymptotic levels. Asymptotic levels are defined as a progressively slower rate of removal of COC concentration and mass from the aquifer under similar operating conditions (i.e., pumping rates, capture zone, operational uptime) compared with the initial stages of P&T system operation.

When asymptotic conditions are identified, the engineer should perform the following: (1) evaluate the cost effectiveness and value of operating the P&T system, (2) determine whether optimization of the P&T system is needed (e.g., shut off low-concentration wells in the source area or dissolved distal plume), or (3) evaluate the need to transition to alternative remedial technologies to continue the remedial action and treat areas with asymptotic concentration.

A.3.6.3 Point Degradation Rate

In general, there are three first-order attenuation rate constants of interest at environmentally impacted sites: (1) concentration versus time (known as the point attenuation rate or kpoint), (2) concentration versus distance, and (3) biodegradation ( USEPA 2002[4ZTZFUU8] USEPA. 2002. “Ground Water Issue: Calculation And Use Of First-Order Rate Constants For Monitored Natural Attenuation Studies.” https://nepis.epa.gov/Exe/ZyPURL.cgi?Dockey=10004674.txt. ). The point attenuation rate is a first-order decay constant defined as:

$$
C = C_0 \, e^{-k_{point} t}
$$

where C is the concentration at time t, C0 is the initial concentration (at t = 0) and e is Euler’s number. When concentrations are expressed in natural logarithms, the time parameter β1 in Equation A-3 is the point attenuation rate ( Wilson 2011[W3X6YAYS] Wilson, J.T. 2011. “An Approach for Evaluating the Progress of Natural Attenuation in Groundwater.” https://cfpub.epa.gov/si/si_public_record_report.cfm?Lab=NRMRL&dirEntryId=239868. ). USEPA guidance documents recommend that point attenuation rates be calculated only after a minimum of one order of magnitude decrease in concentration has been observed ( USEPA 1999[R85TDFJZ] USEPA. 1999. “Use of Monitored Natural Attenuation at Superfund, RCRA Corrective Action, and Underground Storage Tank Sites.” Office of Solid Waste and Emergency Response. https://www.epa.gov/sites/default/files/2014-02/documents/d9200.4-17.pdf. ).
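The link between the log-linear regression slope and the point attenuation rate can be sketched as follows. This is an illustration under simple assumptions (all results quantified, OLS on natural-log concentrations, time in years); the function names are hypothetical, and the order-of-magnitude check is a simplified reading of the recommendation above.

```python
import math


def point_attenuation_rate(times_yr, concentrations):
    """Return (k_point in 1/yr, fitted C0) from OLS on ln(C) versus time."""
    ln_c = [math.log(c) for c in concentrations]
    n = len(times_yr)
    t_bar = sum(times_yr) / n
    l_bar = sum(ln_c) / n
    s_tt = sum((t - t_bar) ** 2 for t in times_yr)
    s_tl = sum((t - t_bar) * (l - l_bar) for t, l in zip(times_yr, ln_c))
    slope = s_tl / s_tt
    # The decay constant is the negative of the slope of ln(C) versus t.
    return -slope, math.exp(l_bar - slope * t_bar)


def spans_order_of_magnitude(concentrations):
    """True when the record covers at least a tenfold concentration range."""
    return max(concentrations) / min(concentrations) >= 10.0


# Hypothetical annual results (ug/L) at one monitoring well.
years = [0, 1, 2, 3, 4, 5, 6, 7, 8]
conc = [105.0, 70.0, 58.0, 39.0, 31.0, 22.0, 17.0, 12.0, 9.0]
if spans_order_of_magnitude(conc):
    print(point_attenuation_rate(years, conc))
```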

A.3.6.4 Time to Cleanup / Restoration Time Frame

The cleanup time frame can be estimated by many methods, including modeling and trend analysis, or based on the point attenuation rate. The results from time to cleanup estimations should be viewed cautiously, and any decisions made based on these estimates need to acknowledge the various assumptions, such as assumed future conditions and lumping of processes/reactions, that underpin these estimations.
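When the estimate is based on the point attenuation rate, rearranging the first-order decay relationship gives the time to reach a target concentration. The worked numbers below are hypothetical ($C_0 = 100$ µg/L, a cleanup goal $C_{goal} = 5$ µg/L, and $k_{point} = 0.3$ per year) and assume the rate stays constant, which is one of the assumptions that should be acknowledged.

```latex
t_{goal} = \frac{\ln\left( C_0 / C_{goal} \right)}{k_{point}}
\qquad \text{e.g.,} \qquad
t_{goal} = \frac{\ln(100 / 5)}{0.3\ \text{yr}^{-1}}
         = \frac{2.996}{0.3\ \text{yr}^{-1}}
         \approx 10\ \text{yr}
```

Because the result scales inversely with $k_{point}$, uncertainty in the fitted rate carries directly into the estimated time frame.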

A.3.7 Rebound Testing

The phenomenon of rebound is commonly observed at P&T and other remedial action sites, even after remedial goals, regulatory groundwater criteria, or asymptotic groundwater concentrations are reached during the active operation of the treatment system. Prior to termination or transition of a P&T system to a more cost-effective remedial option, a shutdown evaluation is performed to determine whether desired conditions and/or RAOs have been achieved. As part of this process, a rebound test may be performed along with other evaluations such as modeling or field sampling.

For P&T systems, rebound is the rapid increase in contaminant concentration that can occur after pumping has been discontinued. This increase may be followed by stabilization of the contaminant concentration at a lower level than baseline concentrations. The effects of rebound on remediation efforts present two main difficulties for groundwater restoration: (1) longer treatment times and (2) residual concentrations above the cleanup standard. The factors that affect rebound are physical and chemical characteristics of the contaminant being treated, subsurface solids, and the groundwater quality and geochemistry.

Rebound testing should always be conducted before a P&T system is terminated or transitioned to an alternate active or passive remedial technology. Rebound testing can be completed in a phased approach and may include the following: (1) shutdown of individual pumping wells in a capture zone treatment area where monitoring wells have met the groundwater criteria for a period of time (e.g., 4 quarters) or (2) shutdown of the full-scale system because the groundwater criteria in monitoring wells were met or because asymptotic concentrations and mass removal rates in pumping wells were reached.

During the temporary shutdown period, the P&T system is usually cleaned, serviced, and retained in a state of readiness in case the concentrations in monitoring wells increase. The rebound or increase in concentration after the P&T system is shut down may be a function of several factors (e.g., presence of NAPL, low-permeability soils that contribute to back diffusion, groundwater velocity, improper location of pumping wells). These factors should be considered when designing the rebound test and selecting the rebound concentration that would trigger restart of the P&T system. In addition, the test procedure should be discussed with the regulator before the rebound test is implemented, and the rebound testing plan should be flexible and adaptive to accommodate changes in the observed rebound trends.

The restart rebound concentrations can be a percentage of the baseline concentrations (e.g., 10% to 25%), asymptotic concentration(s), or groundwater criteria (if previously attained). Typical time periods for rebound testing may range from 12 to 24 months, provided the groundwater flow is within the capture zone of the full-scale treatment system. It is important to allow adequate time for rebound testing to ensure that equilibrium conditions are reestablished at the site and that the test period spans one or more seasonal changes. If the concentration in specific wells rebounds to the agreed groundwater concentrations, a decision will need to be made to resume pumping at that well only or to leave it off-line and evaluate the mass discharge from monitoring wells in the treatment area.
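A percentage-of-baseline trigger can be expressed as a simple screening check. The sketch below is illustrative only: the well names, concentrations, and the default 25% fraction are hypothetical, and an actual trigger would be whatever was agreed with the regulator in the rebound testing plan.

```python
def restart_concentration(baseline, fraction=0.25):
    """Restart trigger defined as a fraction of the baseline concentration."""
    return baseline * fraction


def wells_to_restart(latest_results, baselines, fraction=0.25):
    """Return wells whose latest shutdown-period result meets or exceeds the trigger."""
    return sorted(
        well
        for well, conc in latest_results.items()
        if conc >= restart_concentration(baselines[well], fraction)
    )


# Hypothetical baseline and latest rebound-test results (ug/L).
baselines = {"EW-1": 400.0, "EW-2": 80.0}
latest = {"EW-1": 60.0, "EW-2": 25.0}
print(wells_to_restart(latest, baselines, fraction=0.25))
print(wells_to_restart(latest, baselines, fraction=0.10))
```

Running the check at both ends of the 10% to 25% range shows how sensitive the restart decision is to the agreed fraction.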

A.3.8 Spatial Moments Analysis

Spatial moments analysis is a Lagrangian approach for evaluating contaminant mass, and it monitors contaminant mass through both space and, if conducted at several different time periods, time. The first three spatial moments (zeroth, first and second) assess the dissolved phase mass, solute velocity, and dispersion of the contaminant plume ( Govindaraju and Das 2007[FZUE7A3U] Govindaraju, Rao S., and Bhabani S. Das. 2007. “Moment Analysis for Subsurface Storm Flow.” In Moment Analysis For Subsurface Hydrologic Applications, edited by Rao S. Govindaraju and Bhabani S. Das, 247–63. Dordrecht: Springer Netherlands. https://doi.org/10.1007/978-1-4020-5752-6_11. ). Several methods for evaluating spatial moments in 2D are presented below. Moments analysis in 3D uses similar methods but is more complex and beyond the scope of this document.

A.3.8.1 Zeroth Moment (Plume Mass Estimates)

The zeroth moment is an integrated measure of the mass within a spatial extent at a specific snapshot in time. The change in the mass of the plume with time can be evaluated by comparing the zeroth moment at several temporal snapshots.

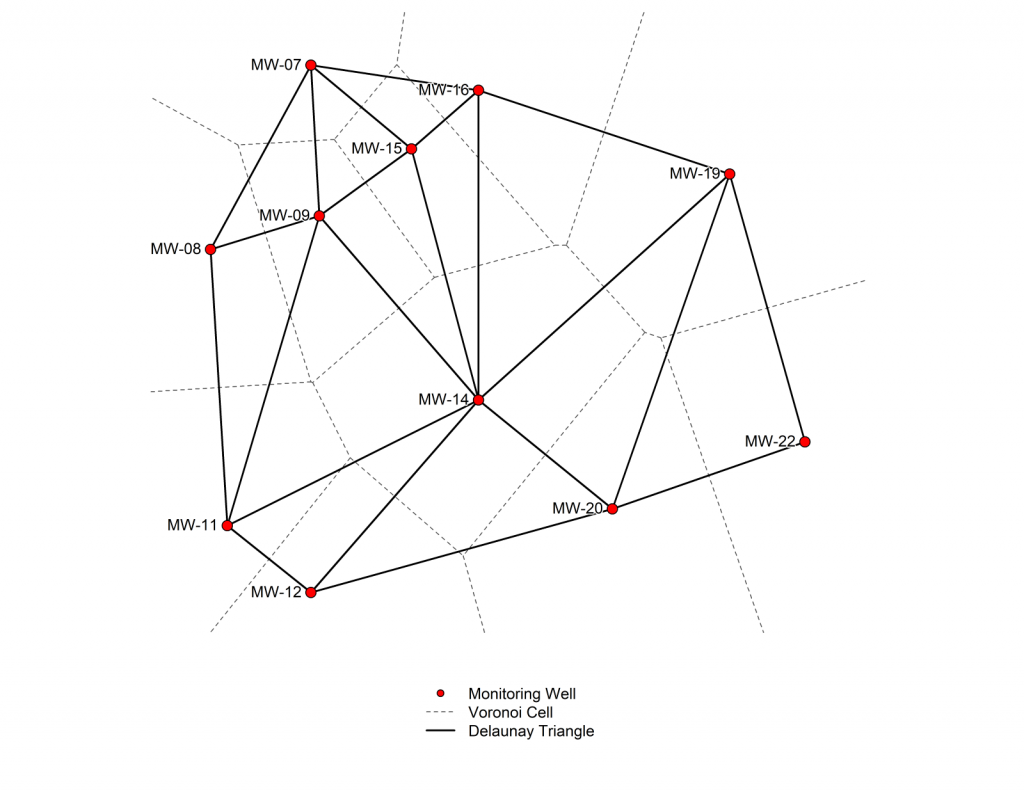

Delaunay triangulation / Voronoi polygons is a method of integrating point data to calculate a global parameter, such as plume mass. This method partitions the interpolation area (i.e., groundwater plume) into subregions associated with each monitoring location ( George and Borouchaki 1998[5M9IISB4] George, Paul L., and Houman Borouchaki. 1998. Delaunay Triangulation and Meshing: Application to Finite Elements. Paris: Hermès. ) (Figure A-4). The concentration in a subregion is defined by the concentration in the associated monitoring well, and the mass in the subregion is equal to the concentration multiplied by the area, thickness, and porosity of the subregion. The total mass in the groundwater plume is the summation of the masses in all subregions. The average concentration of the plume is determined by multiplying the concentration in each subregion by the area of the subregion, then dividing the sum of those values by the total area of the groundwater plume. Delaunay triangulation cannot extrapolate beyond the boundaries of the data. In addition, this method does not interpolate concentration between monitoring locations; instead, it applies the same concentration over the entire spatial extent of a subregion. Consequently, this method can lead to overestimation of plume mass and concentration when the well network is not adequately spaced (i.e., large distances between wells) or when wells are not located in critical areas (e.g., hot spots or areas of low concentration between hot spots).

Figure A-4. Example of Delaunay triangles and Voronoi diagrams.

Source: E. DiFilippo, S.S. Papadopulos & Associates, Inc. Used with permission.
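The subregion mass summation and area-weighted average concentration described above can be sketched as follows. The polygon areas are taken as given (computing the Voronoi geometry is outside this sketch), a single saturated thickness and porosity are assumed for all subregions, and the well data are hypothetical.

```python
def plume_mass_and_mean(cells, thickness_m, porosity):
    """cells: list of (concentration in ug/L, subregion area in m2).

    Returns (dissolved mass in grams, area-weighted mean concentration in ug/L).
    """
    mass_g = 0.0
    conc_times_area = 0.0
    total_area = 0.0
    for conc, area in cells:
        # Pore-water volume of the subregion, converted from m3 to liters.
        water_volume_l = area * thickness_m * porosity * 1000.0
        mass_g += conc * water_volume_l * 1e-6  # ug to g
        conc_times_area += conc * area
        total_area += area
    return mass_g, conc_times_area / total_area


# Hypothetical subregions: one small hot spot and one larger low-concentration cell.
cells = [(100.0, 1000.0), (10.0, 3000.0)]
print(plume_mass_and_mean(cells, thickness_m=5.0, porosity=0.3))
```

Repeating the calculation for each monitoring event gives the series of zeroth-moment estimates that can then be evaluated for a trend.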

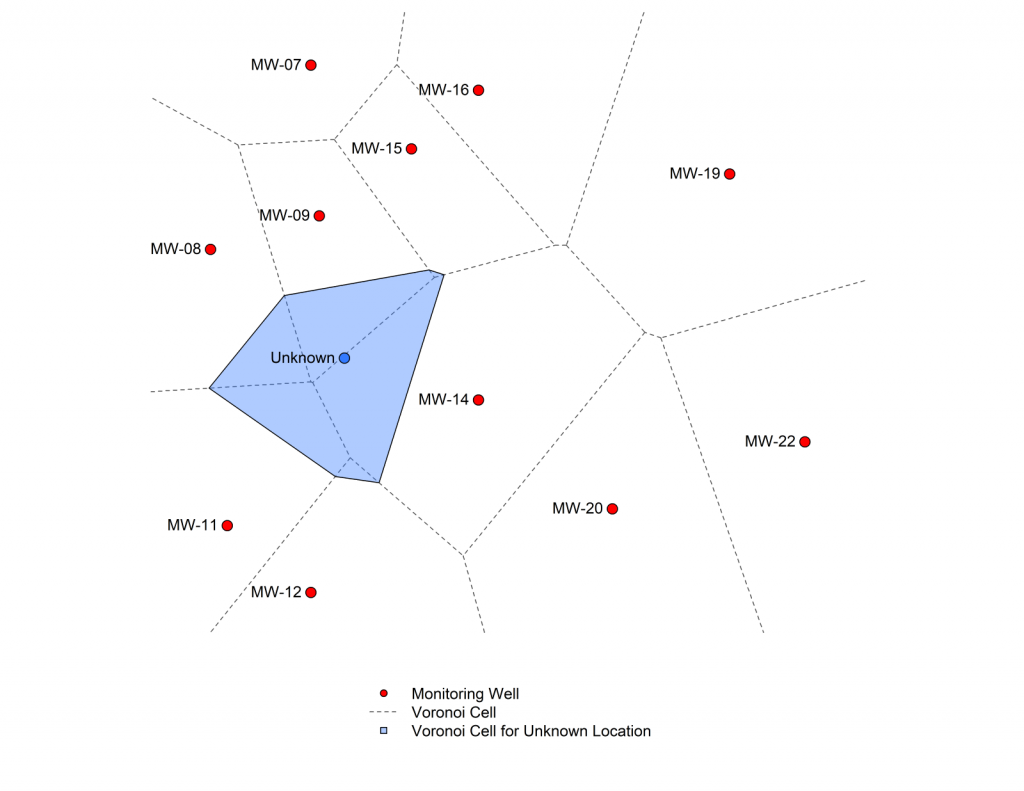

Natural neighbor interpolation using Voronoi polygons estimates the concentration at an unobserved location based on weighted values of its nearest neighbors ( ITRC 2016[BQHK82Z8] ITRC. 2016. “Long-Term Contaminant Management Using Institutional Controls.” Washington, D.C.: Interstate Technology & Regulatory Council, Long-Term Contaminant Management Using Institutional Controls Team. https://institutionalcontrols.itrcweb.org/. ). Similar to the Delaunay triangulation method, this method partitions the interpolation area (i.e., groundwater plume) into subregions associated with each monitoring location. Next, a new polygon is constructed for the unknown location. The proportion of overlap between the polygon for the unknown location and the subregions associated with the nearby monitoring locations defines the interpolation weights for each neighboring data point (Figure A-5). Similar to Delaunay triangulation, the natural neighbor method cannot extrapolate beyond the boundaries of the data. This method also provides poor results along the edges of the interpolation extent, as it only uses the averages of the available data.

Figure A-5. Example of natural neighbor interpolation. The Voronoi cell of the unknown location is composed of roughly 1% MW-12, 15% MW-08, 20% MW-11, 30% MW-09, and 34% MW-14.

Source: E. DiFilippo, S.S. Papadopulos & Associates, Inc. Used with permission.
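Using the overlap proportions listed in the Figure A-5 caption as interpolation weights, the estimate at the unknown location is a weighted sum of the neighboring well concentrations. The weights below come from the caption; the concentrations assigned to each well are hypothetical, and the function name is introduced here only for illustration.

```python
def natural_neighbor_estimate(weights, concentrations):
    """Weighted sum of neighbor concentrations; weights are area-overlap fractions."""
    total = sum(weights.values())
    if abs(total - 1.0) > 1e-6:
        raise ValueError("overlap fractions should sum to 1")
    return sum(weights[well] * concentrations[well] for well in weights)


# Overlap fractions from the Figure A-5 caption.
weights = {"MW-12": 0.01, "MW-08": 0.15, "MW-11": 0.20, "MW-09": 0.30, "MW-14": 0.34}
# Hypothetical concentrations (ug/L) at those wells.
conc = {"MW-12": 50.0, "MW-08": 20.0, "MW-11": 10.0, "MW-09": 40.0, "MW-14": 5.0}
print(natural_neighbor_estimate(weights, conc))
```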

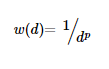

Inverse-distance weighting (IDW) interpolates the concentration at an unobserved location based on the distance to its nearest neighbors ( Shepard 1968[4AZ3N7PM] Shepard, Donald. 1968. “A Two-Dimensional Interpolation Function for Irregularly-Spaced Data.” In Proceedings of the 1968 23rd ACM National Conference, 517–24. ACM ’68. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/800186.810616. ; ITRC 2016[43Z6S9G7] ITRC. 2016. “Geospatial Analysis for Optimization at Environmental Sites.” Washington, D.C.: Interstate Technology & Regulatory Council, Geospatial Analysis for Optimization at Environmental Sites Team. https://gro-1.itrcweb.org/. ). This interpolation method assumes that neighbors that are in close proximity to the unobserved location exert a stronger influence than neighbors that are farther away. A weighting function is used to apply a multiplier to the measured data points used in the interpolation:

$$
w(d) = \frac{1}{d^{p}}
$$

where w(d) is the weight for data points at a distance of d, and p is the weighting power ( ITRC 2016[43Z6S9G7] ITRC. 2016. “Geospatial Analysis for Optimization at Environmental Sites.” Washington, D.C.: Interstate Technology & Regulatory Council, Geospatial Analysis for Optimization at Environmental Sites Team. https://gro-1.itrcweb.org/. ). The weighting power is user-specified; higher weighting powers diminish the effect of faraway data on the interpolated value ( ITRC 2016[43Z6S9G7] ITRC. 2016. “Geospatial Analysis for Optimization at Environmental Sites.” Washington, D.C.: Interstate Technology & Regulatory Council, Geospatial Analysis for Optimization at Environmental Sites Team. https://gro-1.itrcweb.org/. ). IDW is an exact interpolator—which means it precisely reproduces the known values at sampled locations—and is relatively easy and quick to implement ( Mueller et al. 2004[NB8FXX6U] Mueller, T. G., N. B. Pusuluri, K. K. Mathias, P. L. Cornelius, R. I. Barnhisel, and S. A. Shearer. 2004. “Map Quality for Ordinary Kriging and Inverse Distance Weighted Interpolation.” Soil Science Society of America Journal 68 (6): 2042–47. https://doi.org/https://doi.org/10.2136/sssaj2004.2042.Kravchenko and Bullock 1999[U9CT28VC] Kravchenko, Alexandra, and Donald G. Bullock. 1999. “A Comparative Study of Interpolation Methods for Mapping Soil Properties.” Agronomy Journal 91 (3): 393–400. https://doi.org/https://doi.org/10.2134/agronj1999.00021962009100030007x. ITRC 2016[43Z6S9G7] ITRC. 2016. “Geospatial Analysis for Optimization at Environmental Sites.” Washington, D.C.: Interstate Technology & Regulatory Council, Geospatial Analysis for Optimization at Environmental Sites Team. https://gro-1.itrcweb.org/. ).
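A minimal IDW sketch is shown below, assuming weights of the form 1/d^p and using all sample points rather than a limited search neighborhood. The coordinates and concentrations are hypothetical. Returning the measured value when the target coincides with a sample location is what makes the method an exact interpolator.

```python
import math


def idw(x, y, samples, power=2.0):
    """Interpolate at (x, y) from samples given as (x, y, value) tuples."""
    weighted_sum = 0.0
    weight_total = 0.0
    for sx, sy, value in samples:
        d = math.hypot(x - sx, y - sy)
        if d == 0.0:
            return value  # exact interpolator: honor the measured value
        w = 1.0 / d ** power
        weighted_sum += w * value
        weight_total += w
    return weighted_sum / weight_total


# Hypothetical wells: (easting m, northing m, concentration ug/L).
wells = [(0.0, 0.0, 100.0), (100.0, 0.0, 20.0), (0.0, 100.0, 40.0)]
for p in (1.0, 2.0, 4.0):
    print(p, idw(20.0, 10.0, wells, power=p))
```

Comparing the printed estimates across powers illustrates how a higher weighting power pulls the result toward the nearest well.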

Kriging is the process of estimating the value of a property at a specific location by interpolating known (sampled) values that are available within the vicinity ( Kitanidis and Shen 1996[SG5BSVWA] Kitanidis, P. K., and K. Shen. 1996. “Geostatistical Interpolation of Chemical Concentration.” Washington D.C.: American Chemical Society. https://cfpub.epa.gov/si/si_public_record_Report.cfm?Lab=NCER&dirEntryID=69461. ; Delhomme 1978[HF6PFVJZ] Delhomme, J. P. 1978. “Kriging in the Hydrosciences.” Advances in Water Resources 1 (5): 251–66. https://doi.org/https://doi.org/10.1016/0309-1708(78)90039-8. ). Similar to IDW, kriging is an exact interpolator. All kriging methods first require development of a covariance model, or semi-variogram, which is a measure of the spatial dependence between two observations as a function of the distance between them and the orientation of the line connecting them. Ordinary kriging interpolates at an unobserved location based on the covariance model, assuming a normal distribution of the measured data. In quantile kriging, the interpolation is performed on a uniform-score rank transformation of the raw data, allowing for transformation into a normally distributed data set that can then be interpolated using ordinary kriging. While kriging is more computationally intensive than IDW or natural neighbor interpolation, it provides a more accurate representation of spatial data because it considers spatial structure such as anisotropy and provides a kriging variance that can identify areas of spatial uncertainty ( Mueller et al. 2004[NB8FXX6U] Mueller, T. G., N. B. Pusuluri, K. K. Mathias, P. L. Cornelius, R. I. Barnhisel, and S. A. Shearer. 2004. “Map Quality for Ordinary Kriging and Inverse Distance Weighted Interpolation.” Soil Science Society of America Journal 68 (6): 2042–47. https://doi.org/https://doi.org/10.2136/sssaj2004.2042. ; Kravchenko and Bullock 1999[U9CT28VC] Kravchenko, Alexandra, and Donald G. Bullock. 1999. “A Comparative Study of Interpolation Methods for Mapping Soil Properties.” Agronomy Journal 91 (3): 393–400. https://doi.org/https://doi.org/10.2134/agronj1999.00021962009100030007x. ).
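The mechanics of ordinary kriging can be sketched for a small data set as follows. This is a teaching illustration under strong assumptions: an isotropic exponential semi-variogram with an assumed sill and range and no nugget (in practice the model is fitted to the data and may be anisotropic), all samples used as neighbors, and a simple linear solver. The function names, parameters, and well data are hypothetical.

```python
import math


def exponential_variogram(h, sill=1.0, rng=100.0):
    """Assumed isotropic exponential semi-variogram with no nugget."""
    return 0.0 if h == 0.0 else sill * (1.0 - math.exp(-3.0 * h / rng))


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ordinary_kriging(x, y, samples, variogram=exponential_variogram):
    """Return (estimate, kriging variance) at (x, y) from (x, y, value) samples."""
    n = len(samples)

    def dist(p, q):
        return math.hypot(p[0] - q[0], p[1] - q[1])

    # Kriging system: sample-to-sample variogram values plus the unbiasedness row.
    a = [[variogram(dist(samples[i], samples[j])) for j in range(n)] + [1.0]
         for i in range(n)]
    a.append([1.0] * n + [0.0])
    b = [variogram(dist((x, y), s)) for s in samples] + [1.0]
    solution = solve(a, b)
    weights, lagrange = solution[:n], solution[n]
    estimate = sum(w * s[2] for w, s in zip(weights, samples))
    variance = sum(w * g for w, g in zip(weights, b[:n])) + lagrange
    return estimate, variance


# Hypothetical wells: (easting m, northing m, concentration ug/L).
wells = [(0.0, 0.0, 10.0), (100.0, 0.0, 20.0), (0.0, 100.0, 30.0), (100.0, 100.0, 40.0)]
print(ordinary_kriging(50.0, 50.0, wells))
print(ordinary_kriging(0.0, 0.0, wells))
```

The second call evaluates a sampled location, where the estimate reproduces the measured value and the kriging variance falls to zero, consistent with kriging being an exact interpolator when no nugget is used.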

Similar to temporal local regression, spatial local regression estimates the concentration at a given location as a weighted average of its nearest neighbors. This method requires a user-specified smoothing factor, with larger smoothing factors producing more smoothing. Unlike kriging, spatial local regression does not require development of a covariance model and does not assume that sample data were measured without error. Because spatial local regression is a smoothing technique, the fitted surface may not match the measured values at sampled locations, unlike kriging or IDW methods, which are exact interpolators.

The calculated zeroth moment can be highly variable due to fluctuating concentrations at wells exhibiting high concentrations, changes in the number of wells in the monitoring network, or differences in historical monitoring results. Where variability is high, mass estimates should be used in the moment analyses only if the plume is adequately defined.

A.3.8.2 First Moment (Center of Plume Mass)

The first moment locates the center of mass of the plume; tracking it over time provides an estimate of the mean solute velocity and the rate of movement of the center of mass. Spatial and temporal trends associated with the center of mass may indicate whether the plume is migrating downgradient or whether its movement reflects seasonal rainfall or other hydraulic influences (such as source removal or remediation). If the plume’s center of mass has not moved significantly, this generally indicates plume mass stability.
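A minimal sketch of the first-moment calculation is given below, assuming the plume has already been divided into subregions with a mass assigned to each (for example, from the zeroth-moment step). The coordinates, masses, and two-year interval are hypothetical.

```python
import math


def center_of_mass(cells):
    """cells: list of (x, y, mass). Returns the mass-weighted mean position."""
    total = sum(m for _, _, m in cells)
    x_c = sum(x * m for x, _, m in cells) / total
    y_c = sum(y * m for _, y, m in cells) / total
    return x_c, y_c


def centroid_velocity(first, second, elapsed_years):
    """Apparent velocity of the center of mass between two monitoring events."""
    return math.hypot(second[0] - first[0], second[1] - first[1]) / elapsed_years


# Hypothetical subregion masses (g) for two events, two years apart.
event_1 = [(0.0, 0.0, 120.0), (50.0, 0.0, 60.0), (100.0, 10.0, 20.0)]
event_2 = [(0.0, 0.0, 60.0), (50.0, 0.0, 55.0), (100.0, 10.0, 25.0)]
c1, c2 = center_of_mass(event_1), center_of_mass(event_2)
print(c1, c2, centroid_velocity(c1, c2, elapsed_years=2.0))
```

A shift in the center of mass can reflect mass removal near the source as well as actual migration, so the result is best read alongside the zeroth moment.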

A.3.8.3 Second Moment (Plume Dispersion)

The second moment provides an estimate of the dispersion, or spread, of the plume about its center of mass. An increasing trend in the second moment indicates an expanding plume, whereas a decreasing trend indicates a shrinking plume; the trend may differ by direction (e.g., increasing in one direction while decreasing in another).
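The spread in each coordinate direction can be sketched as the mass-weighted variance about the center of mass. The example assumes subregion masses are already available and that the x axis is roughly aligned with the flow direction; the data and function name are hypothetical.

```python
def second_moments(cells):
    """cells: list of (x, y, mass). Returns mass-weighted variances (s_xx, s_yy)."""
    total = sum(m for _, _, m in cells)
    x_c = sum(x * m for x, _, m in cells) / total
    y_c = sum(y * m for _, y, m in cells) / total
    s_xx = sum(m * (x - x_c) ** 2 for x, _, m in cells) / total
    s_yy = sum(m * (y - y_c) ** 2 for _, y, m in cells) / total
    return s_xx, s_yy


# Hypothetical subregion masses (g) for two monitoring events.
event_1 = [(0.0, 0.0, 120.0), (50.0, 5.0, 60.0), (100.0, 10.0, 20.0)]
event_2 = [(0.0, 0.0, 60.0), (50.0, 5.0, 55.0), (100.0, 10.0, 25.0)]
print(second_moments(event_1))
print(second_moments(event_2))
```

Reporting the two directions separately makes it possible to see a plume lengthening along one axis while narrowing along the other.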

A.3.9 Monitoring-Well Optimization

Most complex sites have established sampling and analysis plans that outline a long-term monitoring program. These plans are usually developed based on the CSM and the regulatory program governing the site (e.g., CERCLA, RCRA, and/or state-specific programs). These plans can include topics such as monitoring objectives, data quality objectives, quality assurance plans, and decision criteria. The objectives of the monitoring program may change over time due to changes in site conditions or the acquisition of additional data and may need to be revisited on a regular basis (e.g., annual or five-year review periods). The goal of monitoring-well optimization is to improve monitoring efficiency and subsequently reduce the costs associated with these sampling and analysis plans. Depending on the regulatory framework under which monitoring is performed, this goal can be achieved by any of the following actions:

- reducing sampling frequency of the monitoring network

- reducing the number of wells in the monitoring network

- reducing the reporting requirements

- reducing the number of COCs that are analyzed for

- changing to more-efficient and less-expensive sampling and analysis methods

Monitoring-well optimization evaluations can consist of qualitative analyses, quantitative analyses, or a combination of both. These analyses may be as simple as proposing modifications based on a review of historical data or as complex as developing statistical or deterministic (e.g., fate-and-transport) models of the site and then conducting a formal optimization analysis. Generally, quantitative monitoring-well optimization analyses consist of temporal and/or spatial statistical methods. Purely quantitative monitoring-well optimization evaluations are rarely possible, as most sites exhibit features that require subjective consideration. Several monitoring-well optimization approaches combine the results of different qualitative and/or quantitative analyses.

Decision-making algorithms are used for monitoring-well optimization as a way of synthesizing the results from multiple types of analyses to derive an optimized sampling plan. Two types of decision-making algorithms are used in monitoring-well optimization: (1) heuristic or rule-of-thumb and (2) mathematical.

A.3.10 Plume Stability Evaluation

Numerous USEPA guidance documents present methods for assessing remediation performance for contaminated groundwater, evaluating the progress toward attainment of RAOs, and developing site closure strategies ( USEPA 2015[CPNKFR5X] USEPA. 2015. “Use of Monitored Natural Attenuation for Inorganic Contaminants in Groundwater at Superfund Sites.” U.S. Environmental Protection Agency, Office of Solid Waste and Emergency Response. https://nepis.epa.gov/Exe/ZyPDF.cgi/P100ORN0.PDF?Dockey=P100ORN0.PDF. ; USEPA 2010[7SEPKMZ6] USEPA. 2010. “Monitored Natural Attenuation of Inorganic Contaminants in Ground Water. Volume 3: Assessment for Radionuclides Including Tritium, Radon, Strontium, Technetium, Uranium, Iodine, Radium, Thorium, Cesium, and Plutonium-Americium.” U.S. Environmental Protection Agency, Office of Research and Development. https://archive.epa.gov/ada/web/pdf/p100ebxw.pdf. ; USEPA 2007[4BA5PG9C] USEPA. 2007. “Monitored Natural Attenuation of Inorganic Contaminants in Ground Water. Volume 2: Assessment for Non-Radionuclides Including Arsenic, Cadmium, Chromium, Copper, Lead, Nickel, Nitrate, Perchlorate and Selenium.” U.S. Environmental Protection Agency, Office of Research and Development. https://nepis.epa.gov/Exe/ZyPDF.cgi/60000N76.PDF?Dockey=60000N76.PDF. ; USEPA 2007[SNCZ8IV9] USEPA. 2007. “Monitored Natural Attenuation of Inorganic Contaminants in Ground Water. Volume 1: Technical Basis for Assessment.” U.S. Environmental Protection Agency, Office of Research and Development. http://nepis.epa.gov/Adobe/PDF/60000N4K.pdf. ; USEPA 2003[LBNRHNTE] USEPA. 2003. “Performance Monitoring of MNA Remedies for VOCs in Ground Water.” U.S. Environmental Protection Agency, Office of Research and Development. https://nepis.epa.gov/Exe/ZyPDF.cgi/10004FKY.PDF?Dockey=10004FKY.PDF. ; USEPA 1998[Y4Q3F3Q8] USEPA. 1998. “Technical Protocol for Evaluating Natural Attenuation of Chlorinated Solvents in Ground Water.” https://clu-in.org/download/remed/protocol.pdf. ; USEPA 1999[R85TDFJZ] USEPA. 1999. “Use of Monitored Natural Attenuation at Superfund, RCRA Corrective Action, and Underground Storage Tank Sites.” Office of Solid Waste and Emergency Response. https://www.epa.gov/sites/default/files/2014-02/documents/d9200.4-17.pdf. ). One of the most significant and necessary pieces of information to transition P&T is an understanding of the stability of the plume without pumping. A plume stability evaluation is performed to determine two things:

- whether the plume is migrating, stable, or receding

- a delineation of the extent of the plume at steady state without pumping

If the plume is determined to be stable or receding and lies within an acceptable horizontal and vertical geospatial extent, then MNA is a suitable transition approach. Conversely, if the plume is found to be migrating/expanding horizontally and/or vertically, or the steady-state position of the plume is unacceptable, then an alternate transition approach is needed. This does not rule out MNA; it means that other approaches, such as in situ treatment or engineering/administrative controls, will be required to stabilize and/or pull back the plume to the extent needed prior to transition to MNA in a treatment train approach.

It is difficult to prove stability, or acceptable plume expansion, without turning off the groundwater extraction pumps. All data collected from within the capture zone during P&T operation are hydraulically biased, are not representative of ambient/natural conditions, and cannot be used for plume stability evaluation. Nevertheless, some existing data may be of use, as discussed below.

Two important notes prior to proceeding with a plume stability evaluation:

- If NAPL is present at the site, and unless NAPL stability was previously proven, then the plume stability evaluation should include both the NAPL and dissolved phases. The text below focuses on dissolved-phase COC stability. A document from ITRC (ITRC 2018) is a good reference for guidance on how to demonstrate LNAPL stability, and another ITRC document (ITRC 2020) is a good reference on how to prove DNAPL stability.

- It is not necessary to perform a plume stability evaluation if unacceptable plume instability is already a foregone conclusion. In this case, the project progresses to consider P&T transition to in situ treatment (likely utilizing some components of the P&T system to retain control during treatment).

A.3.10.1 Use of Existing Monitoring Data for Plume Stability Evaluation

As part of the plume stability evaluation, reevaluate the actual capture zone of the P&T system and discern whether any of the groundwater monitoring data were consistently collected outside of it (either laterally or vertically in a hydraulically separate groundwater zone). Monitoring data collected outside the hydraulic zone of influence could be used to evaluate plume stability if they are free of bias from the P&T system hydraulics.

Other sources of existing data that could be used to assess plume stability without P&T are monitoring results collected during past P&T shutdown periods. P&T shutdowns occur for various reasons, including power outages or equipment failures. Some are not useful because they were too short (e.g., days) or groundwater monitoring was not performed. Other shutdowns with more severe causes and longer durations (e.g., weeks to months), during which groundwater monitoring was continued, could be useful. In fact, it is prudent to plan ahead for plume stability monitoring during unforeseen long-duration shutdowns when site access is safe. These data will likely be useful to help inform the timing of the P&T transition, if necessary.

Once existing data are identified, a data quality evaluation must be performed to ensure that they meet the needs of the plume stability evaluation data analysis (see Section 5.4.4).

A.3.10.2 Modeling to Evaluate Plume Stability

Absent existing data, the only other option to perform a plume stability evaluation without shutdown of the P&T system is groundwater fate-and-transport modeling. With an appropriate level of detail in the refined CSM, analytical or numerical modeling can be performed to evaluate the fate of COCs when the groundwater extraction pumps are turned off. The model can be calibrated using existing hydraulic and COC concentration performance monitoring data collected during P&T operation. Future COC plume stability can then be evaluated by turning off the pumping in the model and observing how the hydraulic reversion to natural gradients affects the plume disposition. In addition to the CSM elements (e.g., lithologic layering, hydrogeologic parameters), the modeling also requires site-specific COC decay rates so that the fate-and-transport predictions account for the naturally occurring processes of biodegradation, dilution, volatilization, and dispersion. Redox conditions must be considered to ensure that the appropriate decay rates are used and are representative of post-shutdown conditions.
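As a screening-level illustration of the analytical end of this modeling spectrum, the sketch below evaluates the steady-state solution of the one-dimensional advection-dispersion equation with first-order decay, C(x) = C0 exp[(x / 2αx)(1 - sqrt(1 + 4λαx / v))], and solves it for the distance at which the centerline concentration falls to a cleanup level. It assumes a constant source concentration, uniform flow under natural (non-pumping) gradients, and a single lumped first-order decay rate; transverse dispersion and volatilization are ignored. The function names, parameter names, and input values are hypothetical and are not taken from this guidance. A site model would normally require calibration and a more complete representation of the CSM.

```python
import math


def _decay_exponent(v_m_per_d, alpha_x_m, decay_per_d):
    """Exponent r (1/m) in C(x) = C0 * exp(r * x) for steady-state 1D transport."""
    if v_m_per_d <= 0 or alpha_x_m <= 0 or decay_per_d <= 0:
        raise ValueError("velocity, dispersivity, and decay rate must be positive")
    return (1.0 - math.sqrt(1.0 + 4.0 * decay_per_d * alpha_x_m / v_m_per_d)) / (
        2.0 * alpha_x_m
    )


def steady_state_concentration(x_m, c0, v_m_per_d, alpha_x_m, decay_per_d):
    """Centerline concentration at distance x_m downgradient of the source."""
    return c0 * math.exp(_decay_exponent(v_m_per_d, alpha_x_m, decay_per_d) * x_m)


def steady_state_plume_length(c0, cleanup_level, v_m_per_d, alpha_x_m, decay_per_d):
    """Distance (m) at which the steady-state concentration equals cleanup_level."""
    if cleanup_level >= c0:
        return 0.0  # source is already at or below the cleanup level
    r = _decay_exponent(v_m_per_d, alpha_x_m, decay_per_d)
    return math.log(cleanup_level / c0) / r


# Hypothetical inputs: 1,000 ug/L source, 5 ug/L cleanup level,
# 0.1 m/d seepage velocity, 10 m dispersivity, 0.001 per day decay rate.
length_m = steady_state_plume_length(1000.0, 5.0, 0.1, 10.0, 0.001)
print(f"Estimated steady-state plume length: {length_m:.0f} m")
```

Comparing the estimated length against the distance to the nearest receptor or compliance boundary gives a first indication of whether the steady-state plume position without pumping might be acceptable. Because the decay rate drives the result, the estimate is only as reliable as the site-specific rate supplied to it.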

Before embarking on groundwater modeling, the first question to ask is whether modeling, rather than plume stability evaluation via groundwater extraction shutdown (see Section 5.4.4), is preferable. Modeling would be preferred where there are ample doubts about plume stability without pumping and either site conditions are conducive to modeling or prior successful modeling has been done at the site. Modeling data requirements vary; therefore, it is important to balance the level of modeling with the information available for the site. Insufficient site-specific input will result in a high degree of uncertainty in the model results.

Various models are available to predict plume fate and transport. See Section A.2 for additional information on fate-and-transport modeling and suggested computer models.

A.3.10.3 Plume Stability Evaluation Data Collection Planning

If no or insufficient existing data are available and modeling is determined to be impractical/unnecessary or has overly uncertain results, then new data must be collected to evaluate plume stability and ascertain the re-equilibrated steady-state position of the plume without pumping.

A plume stability evaluation plan, typically approved by stakeholders, is prepared to govern site activities during shutdown of the groundwater extraction system. Its primary purpose is to ensure that conditions during the shutdown are carefully monitored and that appropriate actions are taken (e.g., restart of extraction) if conditions require it. The plan prescribes the scope of the shutdown (e.g., number and location of wells to be turned off), schedule (e.g., shutdown of all wells at once or sequential shutdown and duration), monitoring program, and contingency actions in case unacceptable conditions (see Section 5.4.4) occur. The plan can include frequent initial monitoring (e.g., monthly) and a progressively reduced frequency (e.g., quarterly) as the change in the plume disposition becomes more predictable. The frequency of monitoring depends on site-specific groundwater hydraulics and re-equilibration kinetics. The duration of the plume stability evaluation will vary from short (e.g., 6 months) for high-conductivity sites with rapid kinetics to long (e.g., multiple years) for low-conductivity sites with slow kinetics. Of course, the shutdown can be ended at any time, including when an unacceptable condition occurs. Plume stability monitoring typically requires routine participation from stakeholders to review the data and make continuation/cessation decisions on the shutdown. If monitoring results continue to indicate acceptable plume stability, then the duration of the shutdown may be extended until the transition remedy is determined and implemented.
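To illustrate why the evaluation duration scales with hydraulic conductivity, the sketch below estimates the advective travel time between two points (for example, from the plume edge to a downgradient sentinel well) using the seepage velocity v = K i / n_e, optionally slowed by a retardation factor. This is only a rough planning aid under the assumption of uniform, steady flow under natural gradients; it does not account for the time needed for hydraulic re-equilibration, dispersion, or decay. The function name, parameters, and input values are hypothetical.

```python
def advective_travel_time_days(distance_m, k_m_per_day, gradient,
                               effective_porosity, retardation=1.0):
    """Days for a dissolved COC to travel distance_m by advection alone.

    Seepage velocity is K * i / n_e; a retardation factor of 1.0 means the
    COC moves at the groundwater seepage velocity.
    """
    if min(distance_m, k_m_per_day, gradient, effective_porosity) <= 0:
        raise ValueError("distance, K, gradient, and porosity must be positive")
    if retardation < 1.0:
        raise ValueError("retardation factor must be at least 1.0")
    seepage_velocity = k_m_per_day * gradient / effective_porosity  # m/day
    return distance_m * retardation / seepage_velocity


# Hypothetical comparison for a sentinel well 50 m downgradient,
# hydraulic gradient 0.005, effective porosity 0.25.
for label, k in (("high-conductivity", 10.0), ("low-conductivity", 0.1)):
    days = advective_travel_time_days(50.0, k, 0.005, 0.25)
    print(f"{label} site (K = {k} m/d): about {days:.0f} days")
```

Under these assumed inputs, a hundredfold decrease in hydraulic conductivity produces a hundredfold increase in travel time, which is consistent with the range of durations described above.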

A.3.10.4 Plume Stability Evaluation via Groundwater Extraction Shutdown

If no or insufficient existing data are available and modeling is determined to be impractical/unnecessary or has overly uncertain results, then shutdown of groundwater extraction must be performed to evaluate plume stability. As stated at the start of this subsection, this is one of the most significant and necessary pieces of information to transition P&T systems that are demonstrated ineffective for achieving the RAOs. Its primary purpose is to determine whether a plume is migrating, stable, or receding without pumping. Then, if a stable or receding plume is observed after complete shutdown of all extraction wells, its results can also be used as direct evidence that the remaining mass discharge from the source or plume can be assimilated by natural attenuation for control of the plume. If a receding plume is observed after the shutdown of all extraction wells, then MNA may possibly be capable of meeting the RAOs within a reasonable time frame.

A simple, pragmatic method to evaluate the viability and impact of transitioning off a P&T system is to shut down groundwater extraction (in portions or for the entire site) for an agreed duration of time and routinely monitor the COC concentrations at nearby surrounding and downgradient monitoring wells. The shutdown duration should be designed to be as long as necessary to establish post-P&T shutdown steady-state COC distribution (this assumes steady-state hydraulics will be established before COC re-equilibrium) or be discontinued when an unacceptable condition is encountered (see Section 5.4.4).

One of the primary advantages of performing extraction well shutdown to evaluate plume stability is that it allows for the collection of empirical data describing the subsurface response to termination of pumping as part of the P&T transition process. The results then become a line of evidence supporting the stakeholder requirements for modification of applicable decision documents (e.g., ESD or ROD amendment). The plume stability evaluation plan maintains a safety net for resumption of groundwater extraction should the results of the monitoring prove unacceptable. In so doing, it is a low risk–high reward effort for all stakeholders.

If the results of the plume stability evaluation indicate unacceptable conditions, such as a mass discharge that cannot completely be assimilated by MNA, then that does not necessarily mean P&T should be restarted. Rather, the project team should move to the next plausible transition technology, either in situ treatment (see Section 5.4.6) or engineering/administrative controls (see Section 5.4.7), if stakeholders allow.

A.3.10.5 Plume Stability Evaluation Data Analysis

Data analysis during a plume stability evaluation consists of the following:

- determining unacceptable conditions

- determining migrating, stable, or receding COC plume condition

- identifying when the plume is re-equilibrated and at steady state and the end of the plume stability evaluation
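One commonly used statistical approach for classifying the concentration trend at an individual well is the Mann-Kendall test; this guidance does not prescribe a specific test here, so the sketch below is offered only as one possible line of evidence. It computes the Mann-Kendall S statistic with a tie-corrected variance and a normal approximation for the two-sided p-value. The function name, the 0.05 significance level, and the minimum of eight sampling events are assumptions, and nondetect handling and seasonality are not addressed.

```python
import math
from collections import Counter


def mann_kendall(concentrations, alpha=0.05):
    """Return (S, p_value, label) for a chronological concentration series.

    label is "increasing", "decreasing", or "no trend" at significance alpha.
    """
    n = len(concentrations)
    if n < 8:
        raise ValueError("this sketch assumes at least 8 sampling events")

    # S counts later-minus-earlier increases minus decreases over all pairs.
    s = sum(
        (concentrations[j] > concentrations[i]) - (concentrations[j] < concentrations[i])
        for i in range(n - 1)
        for j in range(i + 1, n)
    )

    # Variance of S, corrected for groups of tied values.
    tie_sizes = Counter(concentrations).values()
    var_s = (
        n * (n - 1) * (2 * n + 5)
        - sum(t * (t - 1) * (2 * t + 5) for t in tie_sizes)
    ) / 18.0
    if var_s == 0:  # every value identical
        return 0, 1.0, "no trend"

    # Continuity-corrected normal approximation.
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided

    if p_value < alpha:
        return s, p_value, "increasing" if s > 0 else "decreasing"
    return s, p_value, "no trend"


# Hypothetical post-shutdown results (ug/L) at one downgradient well.
print(mann_kendall([3.1, 3.4, 3.9, 4.6, 5.2, 5.9, 6.8, 7.5]))
```

An increasing trend at downgradient wells would support a migrating classification, while decreasing or absent trends across the monitoring network would support a receding or stable classification, respectively. A sustained absence of significant trends after an initial period of change is also one indication that the plume may have re-equilibrated.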

A.3.10.5.1 Determining Unacceptable Conditions

As part of the planning phase of the plume stability evaluation (see Section 5.4.4), the project team determines the unacceptable conditions that will trigger restart of groundwater extraction or other contingency response action to mitigate unacceptable plume migration. Unacceptable conditions can be organized into primary and secondary categories. Primary unacceptable conditions are more serious in nature and warrant response actions up to and including restart of groundwater extraction. Secondary unacceptable conditions are early warning conditions that are potentially indicative of future primary unacceptable conditions. Response actions to address secondary unacceptable conditions typically include more-intensive monitoring and data evaluation.

Example unacceptable conditions are listed below:

- Primary 1—Off-site migration of multiple COCs as indicated by a new observation of a concentration that exceeds the cleanup criteria in wells without prior exceedances.

- Primary 2—On-site migration of multiple COCs (distal) as indicated by a new observation of a concentration that exceeds the cleanup criteria in wells without prior exceedances located at a downgradient planar boundary protective of human health and the environment.

- Secondary 1—On-site dissolved COC migration as indicated by a new observation of a concentration that exceeds the cleanup level in downgradient wells without prior exceedances.

- Secondary 2—On-site dissolved COC migration as indicated by an observation of a concentration that exceeds the cleanup level and statistically increasing concentration trends in downgradient wells proximal to the NAPL footprint without prior exceedances.

- Secondary 3—On-site dissolved COC migration as indicated by geospatial plume moment analysis that shows a statistically significant increase in COC plume mass and a consistently downgradient advancing center of mass that has traveled greater than 30% of the baseline plume length.
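Two of the checks that recur in the example conditions above can be sketched in code: identifying new exceedances in wells without prior exceedances, and testing whether the plume center of mass has advanced consistently downgradient by more than 30% of the baseline plume length. The sketch below is a simplified illustration only. It approximates the center of mass as a weighted average of well coordinates, whereas a formal spatial moment analysis would typically integrate an interpolated concentration surface, and it does not evaluate the statistical significance of changes in plume mass that Secondary 3 also requires. All function names, parameter names, and data values are hypothetical.

```python
import math


def new_exceedances(prior_max, latest, cleanup_level):
    """Wells whose latest result exceeds the cleanup level with no prior exceedance.

    prior_max maps well ID to its historical maximum concentration;
    latest maps well ID to the most recent concentration.
    """
    return sorted(
        well
        for well, conc in latest.items()
        if conc > cleanup_level and prior_max.get(well, 0.0) <= cleanup_level
    )


def center_of_mass(wells):
    """Weighted centroid of (x, y, weight) tuples; weight is a mass surrogate."""
    total = sum(w for _, _, w in wells)
    if total <= 0:
        raise ValueError("total weight must be positive")
    return (
        sum(x * w for x, _, w in wells) / total,
        sum(y * w for _, y, w in wells) / total,
    )


def center_of_mass_flag(events, flow_dir_deg, baseline_length_m, fraction=0.30):
    """True if the center of mass advances downgradient at every event and its
    latest displacement exceeds fraction * baseline_length_m.

    events is chronological, with the baseline event first. flow_dir_deg is the
    downgradient direction measured counterclockwise from the +x axis.
    """
    theta = math.radians(flow_dir_deg)
    ux, uy = math.cos(theta), math.sin(theta)
    x0, y0 = center_of_mass(events[0])
    travel = []
    for event in events[1:]:
        x, y = center_of_mass(event)
        travel.append((x - x0) * ux + (y - y0) * uy)  # downgradient displacement
    if not travel:
        return False
    advancing = all(later > earlier for earlier, later in zip([0.0] + travel, travel))
    return advancing and travel[-1] > fraction * baseline_length_m


# Hypothetical data: three wells along the flow path (+x direction).
baseline = [(0.0, 0.0, 10.0), (100.0, 0.0, 5.0), (200.0, 0.0, 1.0)]
event_1 = [(0.0, 0.0, 6.0), (100.0, 0.0, 6.0), (200.0, 0.0, 4.0)]
event_2 = [(0.0, 0.0, 3.0), (100.0, 0.0, 6.0), (200.0, 0.0, 7.0)]
print(center_of_mass_flag([baseline, event_1, event_2], 0.0, 200.0))
print(new_exceedances({"MW-1": 50.0, "MW-2": 2.0}, {"MW-1": 60.0, "MW-2": 7.0}, 5.0))
```

In practice, the weights would reflect both concentration and the aquifer volume each well represents, and any flag raised by checks of this kind would prompt the data evaluation and stakeholder review described in the plume stability evaluation plan rather than an automatic response.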